18 Apr

Computer Vision in AI

Introduction

This chapter of Artificial Intelligence for Beginners covers computer vision, a field that combines AI with visual perception. Computer vision involves teaching computers to "see": to analyze images and videos so that they can understand visual information much as people do. The technology keeps extending what is possible and has already transformed industries such as autonomous vehicles and healthcare.

What is computer vision in AI?

At its core, computer vision in AI is a machine's ability to extract meaningful information from visual data. It enables machines to emulate human vision by processing, analyzing, and interpreting images and videos. Using algorithms and deep learning techniques, computer vision systems can identify objects, recognize faces, find patterns, and even interpret complex scenes.

The most important aspects are as follows:

- Image Recognition: Recognizing and categorizing objects, scenes, and patterns in images.

- Object Detection and Tracking: Locating objects in images and following them across video frames, for example in surveillance systems and autonomous vehicles.

- Image segmentation: Partitioning an image into regions, often by labeling each pixel, so that individual objects and areas can be identified.

- Pose Estimation: Determining the position and orientation of people or objects, for example the locations of a person's joints.

- Image generation: Creating new images or modifying existing ones with techniques such as style transfer.

- Scene Understanding: Interpreting the context and meaning of a whole scene, including how the objects in it relate to one another.

- Video analysis: The process of tracking objects and identifying actions in videos.

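- CNNs and deep learning: Using convolutional neural networks (CNNs) and other deep learning models, which learn visual features directly from data, to improve accuracy.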

- Dataset Annotation and Training: Labeling large datasets so that they can be used to train algorithms.

- Applications: Used in areas such as facial recognition, medical imaging, and autonomous cars.
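
Image segmentation is easiest to picture with a classical, non-deep-learning approach. The sketch below is a minimal illustration under stated assumptions, not how modern CNN-based segmenters work. It assumes a grayscale image stored as a NumPy array with values in [0, 1] and marks every pixel above a fixed threshold as foreground. It then groups touching foreground pixels into separate regions (4-connected components). The function names `threshold_segment` and `label_regions`, the threshold of 0.5, and the synthetic image are all hypothetical choices for illustration.

```python
from collections import deque

import numpy as np


def threshold_segment(image: np.ndarray, threshold: float) -> np.ndarray:
    """Foreground mask: True wherever a pixel is brighter than `threshold`."""
    return image > threshold


def label_regions(mask: np.ndarray) -> tuple[np.ndarray, int]:
    """Give each 4-connected group of foreground pixels its own label 1, 2, 3, ...

    Background pixels keep label 0. Returns the label image and the number of regions.
    """
    labels = np.zeros(mask.shape, dtype=int)
    count = 0
    rows, cols = mask.shape
    for r in range(rows):
        for c in range(cols):
            if mask[r, c] and labels[r, c] == 0:
                # Unlabeled foreground pixel: start a new region and flood-fill it (BFS).
                count += 1
                labels[r, c] = count
                queue = deque([(r, c)])
                while queue:
                    y, x = queue.popleft()
                    for dy, dx in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                        ny, nx = y + dy, x + dx
                        if (0 <= ny < rows and 0 <= nx < cols
                                and mask[ny, nx] and labels[ny, nx] == 0):
                            labels[ny, nx] = count
                            queue.append((ny, nx))
    return labels, count


# Synthetic 6x6 grayscale image: two bright objects on a dark background.
image = np.zeros((6, 6))
image[0:2, 0:2] = 0.9  # object 1, top-left (4 pixels)
image[3:6, 3:5] = 0.8  # object 2, bottom-right (6 pixels)

labels, n_regions = label_regions(threshold_segment(image, threshold=0.5))
print(n_regions)  # 2
print(labels)
```

A fixed threshold only works when objects are clearly brighter than the background. Real images with uneven lighting or cluttered scenes are one reason learned segmentation models are used instead.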

How computer vision works

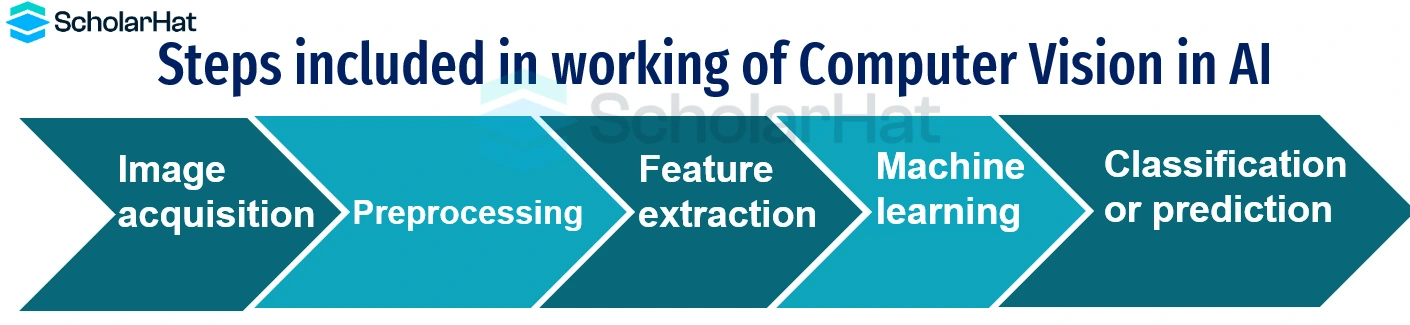

The steps listed below describe how computer vision works:

- Image acquisition: Capturing images with cameras or other imaging equipment.

- Preprocessing: Preparing images for analysis, for example by removing noise, resizing them, or normalizing their brightness.

- Feature extraction: Using algorithms to locate significant characteristics or patterns in the image, such as edges, corners, and textures.

- Machine learning: Analyzing and categorizing the extracted features with trained models.

- Classification or prediction: Making a decision or prediction, such as assigning a label to an image, based on that analysis.
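
The five steps above can be traced end to end in a toy example. This is a minimal sketch, not a production pipeline, and it rests on several assumptions:

- NumPy is available.
- The camera is replaced by a hypothetical `acquire_image` function that synthesizes noisy striped images.
- Preprocessing is a 3x3 mean filter plus min-max scaling.
- The features are two hand-crafted gradient statistics.
- The "learned model" is a simple nearest-centroid classifier.

A real system would typically use a CNN for the feature-extraction and learning steps.

```python
import numpy as np

rng = np.random.default_rng(0)


def acquire_image(kind: str, size: int = 16) -> np.ndarray:
    """Hypothetical stand-in for a camera: a noisy grayscale image with stripes.

    kind="vertical" gives vertical stripes, anything else gives horizontal stripes.
    """
    stripes = (np.arange(size) // 2) % 2  # 0, 0, 1, 1, 0, 0, 1, 1, ...
    if kind == "vertical":
        img = np.tile(stripes, (size, 1))  # intensity changes from column to column
    else:
        img = np.tile(stripes[:, None], (1, size))  # intensity changes from row to row
    return img.astype(float) + rng.normal(0.0, 0.1, (size, size))


def preprocess(img: np.ndarray) -> np.ndarray:
    """Denoise with a 3x3 mean filter, then rescale intensities to [0, 1]."""
    h, w = img.shape
    padded = np.pad(img, 1, mode="edge")
    smoothed = sum(padded[dy:dy + h, dx:dx + w] for dy in range(3) for dx in range(3)) / 9.0
    return (smoothed - smoothed.min()) / (smoothed.max() - smoothed.min() + 1e-8)


def extract_features(img: np.ndarray) -> np.ndarray:
    """Two hand-crafted features: mean absolute intensity change along x and along y."""
    gx = np.abs(np.diff(img, axis=1)).mean()  # large for vertical stripes
    gy = np.abs(np.diff(img, axis=0)).mean()  # large for horizontal stripes
    return np.array([gx, gy])


def train_centroids(examples: dict[str, list[np.ndarray]]) -> dict[str, np.ndarray]:
    """'Learning' step: store the mean feature vector (centroid) of each class."""
    return {
        label: np.mean([extract_features(preprocess(im)) for im in images], axis=0)
        for label, images in examples.items()
    }


def classify(img: np.ndarray, centroids: dict[str, np.ndarray]) -> str:
    """Prediction step: return the class whose centroid is closest to the image's features."""
    feats = extract_features(preprocess(img))
    return min(centroids, key=lambda label: np.linalg.norm(feats - centroids[label]))


training = {kind: [acquire_image(kind) for _ in range(5)] for kind in ("vertical", "horizontal")}
centroids = train_centroids(training)
print(classify(acquire_image("vertical"), centroids))    # vertical
print(classify(acquire_image("horizontal"), centroids))  # horizontal
```

Each function maps to one step in the list: acquisition, preprocessing, feature extraction, learning, and prediction. That makes it easy to swap in a stronger component, such as a trained CNN, for any single stage.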

The History of computer vision in AI

The following is an overview of the history of computer vision:

- Early Uses: Barcode scanning and character recognition were among computer vision's first applications.

- Hardware developments: Computer vision capabilities were enhanced by stronger processors and specialized hardware.

- Big Data: Large annotated datasets such as ImageNet provided far more training data, which led to more accurate models.

- Deep Learning: The incorporation of convolutional neural networks (CNNs) transformed object detection and image classification.

- Object Tracking: As computer vision systems became better at identifying and tracking objects, applications such as autonomous cars and surveillance became possible.

- Depth Estimation: 3D reconstruction & depth perception were made possible by methods like structured light and stereo vision.

- Image Creation: Realistic image synthesis & style transfer were made easier by generative adversarial networks (GANs).

- Cross-domain Applications: Robotics, facial recognition, medical imaging, autonomous cars, and other fields have all benefited from computer vision.

- Real-time Performance: Improved algorithms and hardware now make real-time analysis possible for time-sensitive applications.

- Ethical Considerations: The ethical development and use of computer vision technology have been driven by worries about privacy, bias, and fairness.

Applications of computer vision

Here are some applications of computer vision:

- Health: The analysis of medical imaging, the identification of diseases, and surgical support are all made easier by computer vision.

- Automobile industry: It is essential for autonomous vehicles because it enables obstacle detection and avoidance.

- Security Systems: Face recognition & surveillance in security systems both use computer vision.

- Retail: It is used for object detection, inventory control, and cashierless checkout.

- Agriculture: Crop monitoring, disease diagnosis, and yield estimation are made easier by computer vision.

- Entertainment: It is used in immersive experiences such as augmented and virtual reality, and in gesture recognition.

- Quality Control: Computer vision systems inspect products and spot defects during production to ensure product quality.

- Robotics: Through the use of computer vision, robots can navigate and manipulate objects in their surroundings.

- Augmented reality: Computer vision enables real-time object tracking and recognition, which improves augmented reality experiences.

- Other: Computer vision has a wide range of uses, including document analysis, environmental monitoring, sports analysis, and assistive technology.

Computer vision challenges

The following are some important computer vision challenges:

- Data Requirements: Large volumes of labeled data are required for training reliable models, but obtaining and annotating these data can be time-consuming and expensive.

- Variation Handling: To perform reliably in real-world circumstances, computer vision algorithms must handle variations in lighting conditions, viewpoints, and occlusions.

- Ethical Considerations: To achieve fair and impartial applications, it is essential to address privacy issues and minimize bias in computer vision algorithms.

- Computational Complexity: Certain computer vision applications, including high-resolution image processing and real-time video analysis, can be computationally demanding and call for powerful hardware and efficient algorithms.

- Generalization: It remains difficult to get computer vision models to perform reliably on data they did not see during training.

- Semantic Understanding: Researchers are still working on algorithms that understand the semantic meaning of visual objects and scenes in a human-like way.

- Real-time Performance: Processing and interpreting visual data in real time, especially in dynamic environments, requires efficient algorithms and hardware.

- Interpretability: Making computer vision models more interpretable and explainable is essential for building trust and for understanding how they reach their decisions.
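
One common way to ease the Variation Handling and Data Requirements challenges is data augmentation. This means generating altered copies of existing training images so that a model sees more lighting, viewpoint, and occlusion conditions than the raw dataset contains. The sketch below is an illustrative assumption rather than a method prescribed here. It uses plain NumPy, and the hypothetical `augment` function's settings are arbitrary example values: a brightness factor of 0.6 to 1.4, an occluding patch one quarter of the image's height and width, and a 50% flip probability.

```python
import numpy as np


def augment(image: np.ndarray, rng: np.random.Generator) -> np.ndarray:
    """Return a randomly perturbed copy of a grayscale image with values in [0, 1].

    Simulates three kinds of real-world variation: a horizontal flip (a simple
    stand-in for a viewpoint change), brightness scaling (lighting) and a
    blacked-out patch (occlusion).
    """
    out = image.copy()
    if rng.random() < 0.5:
        out = out[:, ::-1]                        # mirror left-right
    out = out * rng.uniform(0.6, 1.4)             # brighter or darker
    h, w = out.shape
    ph, pw = h // 4, w // 4                       # occluder covers 1/16 of the image
    top = rng.integers(0, h - ph + 1)
    left = rng.integers(0, w - pw + 1)
    out[top:top + ph, left:left + pw] = 0.0       # black patch
    return np.clip(out, 0.0, 1.0)                 # keep valid intensity range


rng = np.random.default_rng(42)
image = rng.random((32, 32))                      # placeholder for a real training image
batch = [augment(image, rng) for _ in range(8)]   # 8 varied samples from one image
print(len(batch), batch[0].shape)                 # 8 (32, 32)
```

Augmentation does not replace collecting representative data, but it is a cheap way to make a model less sensitive to conditions that are under-represented in the training set.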

Best practices for computer vision implementation

- Define the Problem Statement & Objectives: Clearly state the problem to be solved and the expected results; this guides the choice of algorithms and techniques.

- Collect and Curate High-Quality Datasets: For training robust models, collect a variety of well-annotated datasets that accurately reflect the target domain.

- Regular Model Evaluation: To pinpoint areas that need improvement, continuously evaluate model performance using the right evaluation metrics and validation approaches.

- Model fine-tuning: Based on evaluation findings, adjust model parameters and hyperparameters to improve performance and meet specific requirements.

- Consider Pretrained Models: To make use of existing knowledge and conserve compute resources, start from pretrained models and fine-tune them for specific tasks.

- Deal with Bias and Fairness: Be aware of potential biases in data and algorithms so that computer vision systems make fair, unbiased decisions.

- Keep Up with Research: To enhance system performance and maintain competitiveness, keep abreast of the most recent developments in computer vision research and implement new strategies.

- Efficiency and Scalability: When designing systems, take into account computational and storage limits and evaluate how well they can scale to accommodate big datasets and real-time processing requirements.

- Robustness to Variations: Take into account changes in the lighting, viewpoints, occlusions, and other elements that might have an impact on the system's performance in real-world situations.

- Collaboration and documentation: Encourage knowledge sharing within the team to ensure consistency. Maintain complete documentation of implementation details, including code, configuration, and training methods.
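
For the Regular Model Evaluation practice, the right metrics depend on the task. For classification, accuracy alone can hide problems when one class is much rarer than another, so precision, recall, and F1 are usually reported alongside it. The sketch below is a minimal, dependency-free illustration using Python's standard library. The labels are made-up example data and the function name `evaluate` is hypothetical. Libraries such as scikit-learn provide well-tested equivalents, for example `sklearn.metrics.classification_report`.

```python
from collections import Counter


def evaluate(y_true: list[str], y_pred: list[str], positive: str) -> dict[str, float]:
    """Accuracy plus precision, recall and F1 for one class treated as 'positive'."""
    pairs = Counter(zip(y_true, y_pred))  # counts of (true label, predicted label)
    tp = pairs[(positive, positive)]
    fp = sum(n for (t, p), n in pairs.items() if p == positive and t != positive)
    fn = sum(n for (t, p), n in pairs.items() if t == positive and p != positive)
    correct = sum(n for (t, p), n in pairs.items() if t == p)
    precision = tp / (tp + fp) if tp + fp else 0.0  # of predicted positives, how many are right
    recall = tp / (tp + fn) if tp + fn else 0.0     # of actual positives, how many were found
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": correct / len(y_true), "precision": precision,
            "recall": recall, "f1": f1}


# Hypothetical validation-set labels and model predictions.
y_true = ["cat", "cat", "dog", "dog", "cat", "dog", "cat", "dog"]
y_pred = ["cat", "dog", "dog", "cat", "cat", "cat", "cat", "dog"]
print(evaluate(y_true, y_pred, positive="cat"))
```

Here accuracy is 5/8 and recall for "cat" is 3/4, but precision is only 3/5 because two dogs were labeled as cats. This is the kind of weakness that a single accuracy number would not reveal.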

Resources for further learning and practice

Online tutorials and courses: Many online tutorials and courses focus specifically on Artificial Intelligence. For additional learning and practice resources, see https://www.scholarhat.com/training/artificial-intelligence-certification-training.

Summary

Computer vision is a powerful technology that can transform many industries and improve our lives in countless ways. In this article, we covered what computer vision is, how it works, its history, its applications, its challenges, and best practices for implementing it. By allowing machines to perceive and interpret visual input, computer vision in AI opens up a world of opportunities, with applications ranging from security to healthcare to transportation. It also has its share of difficulties, such as data availability and ethical issues. By following best practices and keeping up with the latest developments, we can make full use of computer vision and realize its benefits for a better future.