13 Dec

Azure Data Factory Tutorial: A Detailed Explanation For Beginners

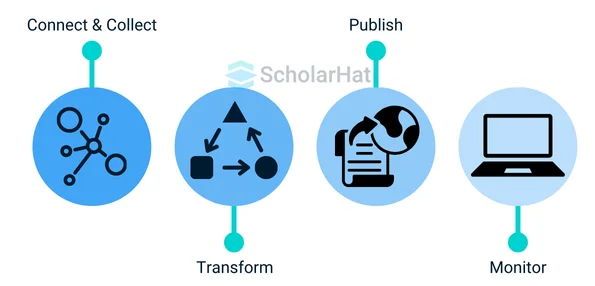

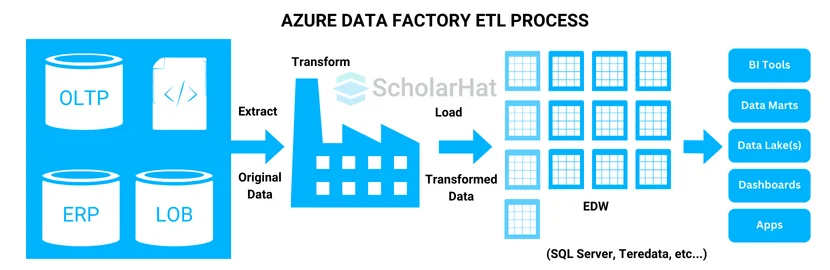

Azure Data Factory is a cloud-based data integration service from Microsoft that helps you move and manage data from different sources to a central location. It is mainly used to create data pipelines that collect, transform, and load data for reporting or analysis. This tool is important because it saves time, reduces manual work, and supports automation of data flows without writing complex code. Azure Data Factory plays a key role in data projects by helping businesses prepare clean and organized data for tools like Power BI, Azure SQL, or other analytics platforms.

In this Azure tutorial, we will study Azure Data Factory, including its role in data integration and orchestration, its components, the Azure Data Factory pipeline, data transformation in Azure Data Factory, scheduling and monitoring pipelines, and more.

Data Integration and Orchestration in Modern Businesses

- Data Integration is the process of collecting and combining data from different sources, like databases, files, applications, or cloud services, into a single, unified system. This makes it easier for businesses to access and use their data in one place. It helps ensure that data is accurate, consistent, and ready for analysis or reporting.

- Data Orchestration is about managing and controlling the movement of data: when it should move, how it moves, and where it goes. It involves scheduling, automating, and monitoring data workflows so that the right data is available at the right time in the right format. It acts like a smart manager that handles the flow of data between systems.

Azure Data Factory is used to connect, move, and transform data from different sources in one place. It automates data workflows, saving time and helping businesses get clean, ready-to-use data for analysis. Well-integrated and well-orchestrated data brings businesses benefits such as:

- Improved Customer Experience

- Innovation and Development

- Risk Mitigation

- Reduced Cost

- Enhanced Data Governance and Security

Microsoft Azure in Data Management

Azure plays a vital role in data management and offers a wide range of services, as outlined below.

- Logical Integration: Azure Data Factory (ADF) acts as your manager, orchestrating data flow between various sources, such as databases, cloud storage, and even on-premises systems.

- Storage for All: Azure Blob Storage holds unstructured data, and Azure Data Lake Storage (ADLS) holds very large volumes of unstructured and semi-structured data. For relational data, Azure SQL Database provides scalability and high availability.

- Unleash the Insights: Azure Databricks brings the power of Apache Spark for large-scale data processing, while Azure Analysis Services lets you build insightful models for data visualization. Power BI then takes center stage, allowing you to create user-friendly dashboards and reports.

- Data Governance and Security First: Azure keeps your data safe with Azure Active Directory (AAD, now Microsoft Entra ID) controlling access and Azure Purview (now Microsoft Purview) managing your data assets across the environment so that data can be shared securely.

- All-in-one Shop: These are just a few of the data management services Azure offers. Together, they create a robust ecosystem, ensuring your data is integrated, stored, analyzed, governed, and secured within the trusted Microsoft cloud.


What is Azure Data Factory?

Azure Data Factory is a cloud-based data integration service by Microsoft that lets you collect, connect, and move data from different sources to a central location. It helps automate data workflows, making it easier to prepare data for analysis, reporting, or storage, all without writing complex code.

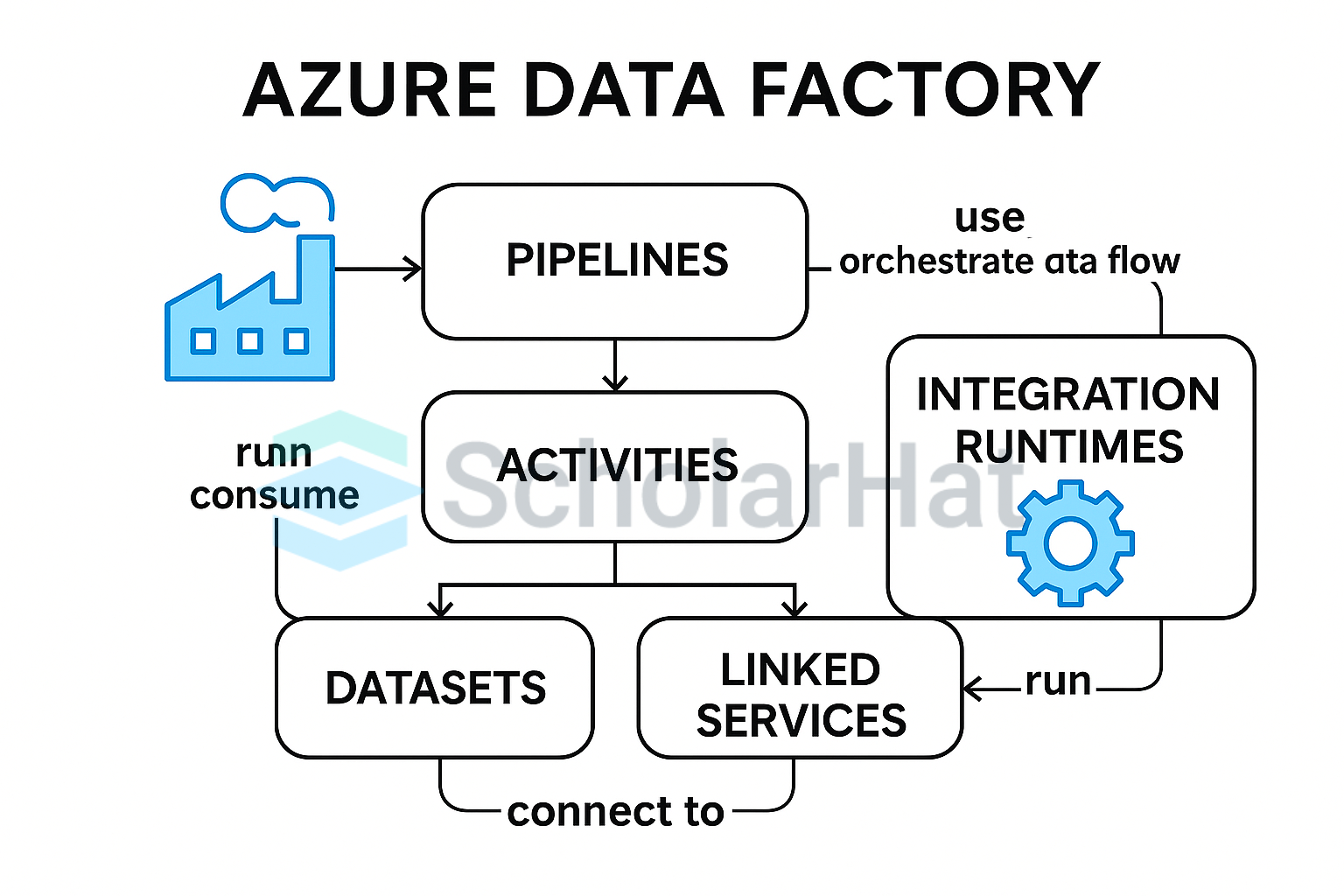

Azure Data Factory Architecture

Azure Data Factory architecture is based on pipelines, which include activities that move and transform data from source to destination. It uses components like pipelines, datasets, linked services, and triggers to build and manage end-to-end data workflows in the cloud.

Components of Azure Data Factory

Azure Data Factory relies on several key components working together to orchestrate data movement and transformation. The key components are depicted below:

1. Pipelines

- Pipelines act as the backbone of Azure Data Factory, visually representing the orchestrated flow of your data.

- A pipeline groups activities together and defines the sequence of data processing steps.

2. Activities

- Activities are individual tasks performed on your data. Examples include copying data from a source to a destination, transforming data using a script, or controlling the flow of the pipeline itself.

- ADF offers several activity types for data movement, transformation, and control.

3. Datasets

- Datasets define the structure of your data within the data stores. They act like blueprints, telling ADF how to interpret the data it interacts with.

- Depending on the data sources, there are different types of datasets, such as Azure Blob Dataset and Azure SQL Dataset.

4. Linked Services

- These establish the secure connections between ADF and external data stores and services.

- They provide the authentication details and configuration settings required for ADF to access your data sources and destinations.

5. Integration Runtimes

- Integration runtimes handle the actual execution of pipelines and provide the compute environment where data movement and transformation activities run.

- Azure Data Factory offers different integration runtime options depending on your needs, such as self-hosted for on-premises data sources and Azure-hosted for cloud-based processing.

6. Control Flow

- Control flow is the orchestration of pipeline activities. It covers chaining activities in a sequence, branching, defining parameters at the pipeline level, and passing arguments when the pipeline is run on demand or from a trigger.

- We can use control flow to sequence certain activities and define the parameters that need to be passed for each one.
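To make the relationship between these components concrete, here is a minimal sketch that builds the JSON-style definitions as plain Python dictionaries: one linked service, two datasets that point at it, and a pipeline with a single Copy activity. All names (`BlobStorageLS`, `RawSalesCsv`, `CuratedSalesCsv`, `CopySalesPipeline`) and the helper functions are hypothetical, and the connection string is a placeholder. Treat it as an illustration of how the pieces reference each other, not as a complete definition.

```python
import json


def blob_csv_dataset(name, linked_service, container, file_name):
    """Dataset: describes where the data lives and how to read it."""
    return {
        "name": name,
        "properties": {
            "type": "DelimitedText",
            "linkedServiceName": {
                "referenceName": linked_service,
                "type": "LinkedServiceReference",
            },
            "typeProperties": {
                "location": {
                    "type": "AzureBlobStorageLocation",
                    "container": container,
                    "fileName": file_name,
                },
                "firstRowAsHeader": True,
            },
        },
    }


def copy_pipeline(name, source_dataset, sink_dataset):
    """Pipeline: a container for activities; here a single Copy activity."""
    return {
        "name": name,
        "properties": {
            "activities": [
                {
                    "name": "CopyRawToCurated",
                    "type": "Copy",
                    "inputs": [{"referenceName": source_dataset, "type": "DatasetReference"}],
                    "outputs": [{"referenceName": sink_dataset, "type": "DatasetReference"}],
                    "typeProperties": {
                        "source": {"type": "DelimitedTextSource"},
                        "sink": {"type": "DelimitedTextSink"},
                    },
                }
            ]
        },
    }


def unresolved_references(pipeline, datasets, linked_services):
    """Return referenced names that no dataset or linked service defines."""
    dataset_names = {d["name"] for d in datasets}
    service_names = {s["name"] for s in linked_services}
    missing = []
    for activity in pipeline["properties"]["activities"]:
        for ref in activity.get("inputs", []) + activity.get("outputs", []):
            if ref["referenceName"] not in dataset_names:
                missing.append(ref["referenceName"])
    for dataset in datasets:
        ref = dataset["properties"]["linkedServiceName"]["referenceName"]
        if ref not in service_names:
            missing.append(ref)
    return missing


# Linked service: the connection itself (placeholder credentials).
linked_service = {
    "name": "BlobStorageLS",
    "properties": {
        "type": "AzureBlobStorage",
        "typeProperties": {"connectionString": "<storage-connection-string>"},
    },
}
source = blob_csv_dataset("RawSalesCsv", "BlobStorageLS", "raw", "sales.csv")
sink = blob_csv_dataset("CuratedSalesCsv", "BlobStorageLS", "curated", "sales.csv")
pipeline = copy_pipeline("CopySalesPipeline", "RawSalesCsv", "CuratedSalesCsv")

print(json.dumps(pipeline, indent=2))
print(unresolved_references(pipeline, [source, sink], [linked_service]))
```

The chain reads from the outside in: the pipeline names its datasets, each dataset names its linked service, and only the linked service holds connection details.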

Create Azure Data Factory Step-by-Step

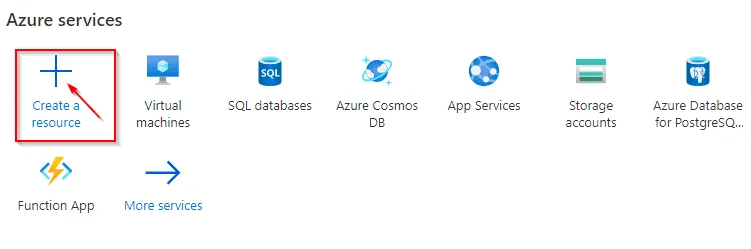

Step 1: Go to the Azure portal.

Step 2: Open the portal menu and select 'Create a resource.'

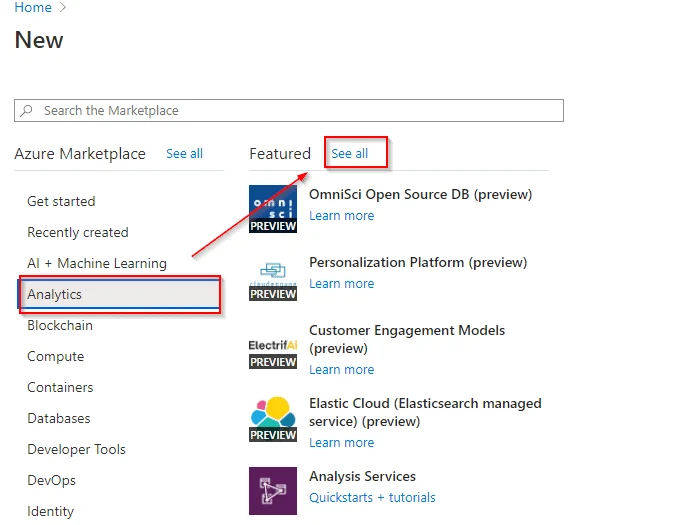

Step 3: After that, select Analytics, and then select See All.

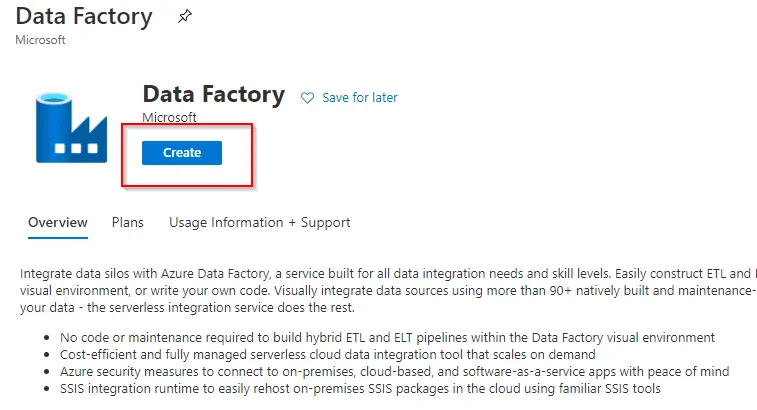

Step 4: Select Data Factory, and then select Create.

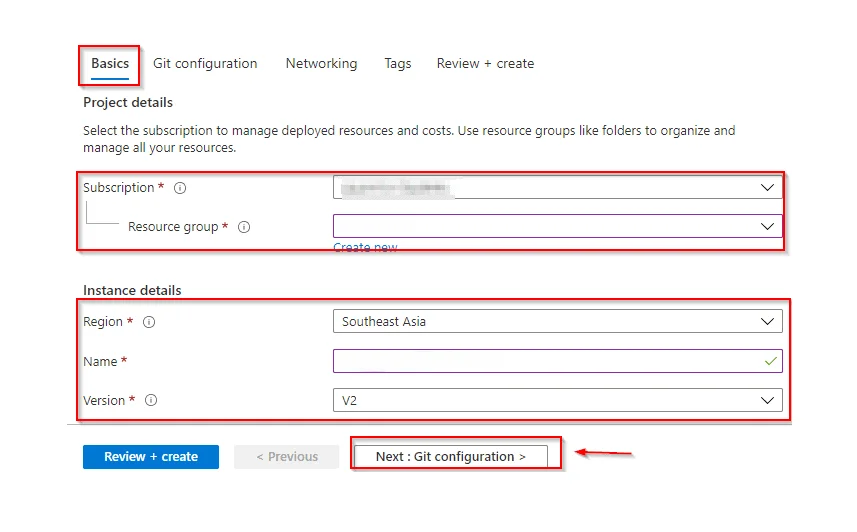

Step 5: On the Basics page, enter the details for your data factory, and then select Git configuration.

Step 6: On the Git configuration page, select the check box, and then go to Networking.

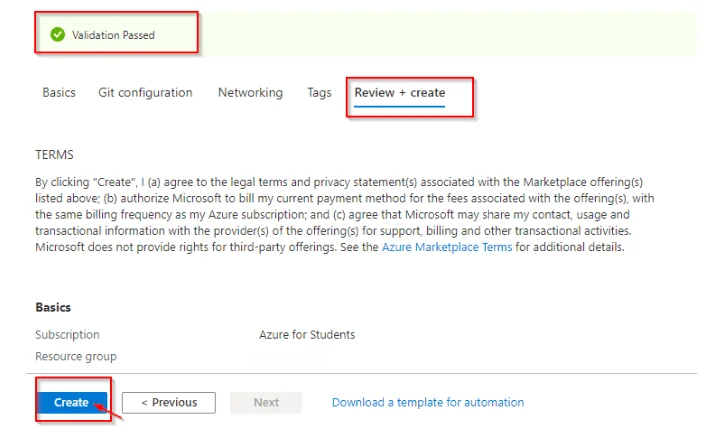

Step 7: On the Networking page, leave the default settings unchanged. Go to Tags, and then select Create.

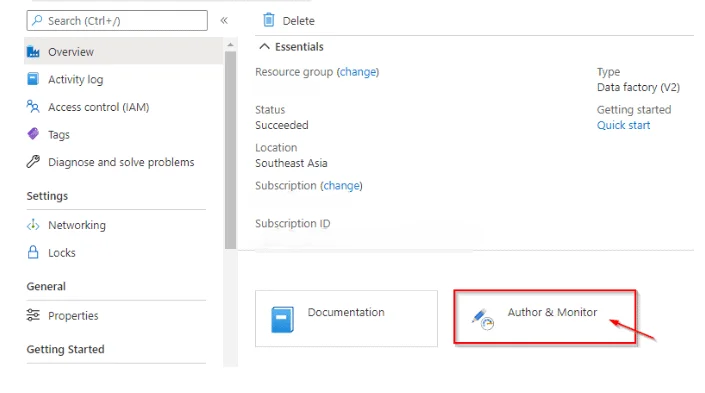

Step 8: Select Go to resource, and then select Author & Monitor to launch the Data Factory UI in a separate tab.
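The same resource can also be created without the portal, through the Azure Resource Manager REST API. The sketch below only builds the HTTP request and does not send it. The subscription ID, resource group, factory name, region, and token are placeholder assumptions, and the helper `build_create_factory_request` is a hypothetical name. A real call needs a valid access token, and your environment may require more properties in the body than the minimal one shown.

```python
import json
import urllib.request

API_VERSION = "2018-06-01"  # Data Factory REST API version


def build_create_factory_request(subscription_id, resource_group,
                                 factory_name, location, bearer_token):
    """Build (but do not send) a PUT request that creates a data factory."""
    url = (
        "https://management.azure.com"
        f"/subscriptions/{subscription_id}"
        f"/resourceGroups/{resource_group}"
        f"/providers/Microsoft.DataFactory/factories/{factory_name}"
        f"?api-version={API_VERSION}"
    )
    body = json.dumps({"location": location}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        method="PUT",
        headers={
            "Authorization": f"Bearer {bearer_token}",
            "Content-Type": "application/json",
        },
    )


# Placeholder values, for illustration only.
request = build_create_factory_request(
    subscription_id="00000000-0000-0000-0000-000000000000",
    resource_group="rg-demo",
    factory_name="adf-demo-factory",
    location="eastus",
    bearer_token="<access-token>",
)
print(request.get_method(), request.full_url)
# Sending would be urllib.request.urlopen(request), which needs a real token.
```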

What is Azure Data Factory Pipeline?

An Azure Data Factory pipeline is like a step-by-step instruction manual for tidying a messy room: it turns chaos into something useful. It groups a series of tasks, like moving your data from boxes in the corner (storage locations) to a neat filing cabinet (a database). The pipeline can even clean and organize your data along the way, much as you might sort your toys.

You can follow these steps one by one or tackle different parts of the room (your data) simultaneously. In addition:

- The pipeline can be set to run on a schedule, like cleaning your room every week, or it can be triggered by an event, like starting when you get new toys.

- You can monitor the progress of the pipeline, just like checking if your room is clean and organized, to ensure everything is working smoothly.

- Azure Data Factory pipelines automate the process of cleaning, organizing, and managing your data, even if you're not a data pro.
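The "one by one or simultaneously" idea can be sketched with a small dependency graph. In a pipeline definition, each activity can list the activities it depends on in a `dependsOn` array; activities with no pending dependencies are free to run in parallel. The activity names below are hypothetical, and the grouping function is an illustration built on Python's `graphlib`, not how ADF schedules work internally.

```python
from graphlib import TopologicalSorter

# Hypothetical activities, trimmed to the fields that matter for ordering.
activities = [
    {"name": "CopySales", "dependsOn": []},
    {"name": "CopyCustomers", "dependsOn": []},
    {"name": "JoinData", "dependsOn": [
        {"activity": "CopySales", "dependencyConditions": ["Succeeded"]},
        {"activity": "CopyCustomers", "dependencyConditions": ["Succeeded"]},
    ]},
    {"name": "LoadWarehouse", "dependsOn": [
        {"activity": "JoinData", "dependencyConditions": ["Succeeded"]},
    ]},
]


def execution_waves(activities):
    """Group activities into waves; each wave can run in parallel."""
    graph = {
        a["name"]: {d["activity"] for d in a.get("dependsOn", [])}
        for a in activities
    }
    sorter = TopologicalSorter(graph)
    sorter.prepare()  # raises CycleError if the dependencies form a loop
    waves = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())
        waves.append(ready)
        sorter.done(*ready)
    return waves


for number, wave in enumerate(execution_waves(activities), start=1):
    print(f"wave {number}: {', '.join(wave)}")
```

Here the two copy activities land in the first wave, the join waits for both, and the load runs last.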

How to Create Your First Azure Data Factory Pipeline

Here are the steps to create your first Azure Data Factory pipeline:

- Setting Up the Stage

- Log in to the Azure portal and select "Create a resource."

- Under "Integration," choose "Data Factory."

- Provide a name, subscription, and resource group for your data factory.

- (Optional) Enable Git integration if you want version control for your pipelines.

- Once the details are filled in, create the data factory resource.

- Building Your Pipeline

- Go to your newly created data factory and navigate to "Author & Monitor" to launch the Data Factory Studio.

- Within the studio, click on "Create pipeline" to get started.

- Give your pipeline a descriptive name to quickly identify its purpose.

- Defining the Data Flow

- Pipelines consist of activities that tell ADF what to do with your data. A common first step is copying data, which can be done using the built-in copy activity.

- Configure the source and destination for the copy activity. This involves defining two key things: datasets and linked services.

- Datasets describe the structure of your data, like what columns it has and their data types.

- Linked services act as connections between ADF and your data storage locations (like Azure Blob Storage or SQL databases).

- Testing and Monitoring

- Once you've defined the activities within your pipeline, you can test it to see if it functions as expected. Run a test to identify any errors or unexpected behavior.

- ADF allows you to set up triggers to automate pipeline execution. This means you can schedule the pipeline to run at specific times or have it start when certain events occur.

- The pipeline's run history provides valuable insights. You can monitor it to track successful runs, identify errors, and troubleshoot any issues that may arise.
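Besides the test button in the studio, a pipeline run can be started on demand through the REST API, which is handy for quick checks from a script. The sketch below builds such a request without sending it. The factory resource ID, the pipeline name, the `inputFolder` parameter, and the helper `build_create_run_request` are all hypothetical, and a real call needs a valid access token.

```python
import json
import urllib.request


def build_create_run_request(factory_resource_id, pipeline_name,
                             parameters, bearer_token):
    """Build (but do not send) a POST request that starts a pipeline run."""
    url = (
        "https://management.azure.com"
        f"{factory_resource_id}/pipelines/{pipeline_name}/createRun"
        "?api-version=2018-06-01"
    )
    # The request body is the map of pipeline parameter names to values.
    body = json.dumps(parameters).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        method="POST",
        headers={
            "Authorization": f"Bearer {bearer_token}",
            "Content-Type": "application/json",
        },
    )


# Placeholder identifiers, for illustration only.
factory_id = (
    "/subscriptions/00000000-0000-0000-0000-000000000000"
    "/resourceGroups/rg-demo"
    "/providers/Microsoft.DataFactory/factories/adf-demo-factory"
)
run_request = build_create_run_request(
    factory_id, "CopySalesPipeline", {"inputFolder": "raw/2024"}, "<access-token>"
)
print(run_request.get_method(), run_request.full_url)
```

The response to a successful call carries a run ID, which is what you would look up in the run history afterwards.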

Connection Sources of Azure Data Factory

Azure Data Factory (ADF) connects to various sources where your data resides. This connectivity allows you to bring your data together from various locations for centralized management, processing, and analysis. Here's a breakdown of how ADF handles these connections.

- Extensive List of Supported Sources: Azure Data Factory supports a wide range of data sources, such as cloud storage, Software as a Service (SaaS) applications, big data stores, and others.

- Pre-built Connectors for Streamlined Connections: Azure Data Factory offers over 90 built-in connectors that act as bridges to these various data sources. These connectors simplify the connection process by handling authentication (like logins) and data format specifics.

- Linked Services: Defining the Connection Details: The linked service specifies the data source type, authentication credentials (like passwords or API keys), and any other relevant connection properties needed for ADF to access your data.
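As an illustration of what a linked service definition holds, the sketch below builds one for an Azure SQL database as a Python dictionary. Rather than embedding the password, it points at a secret in Azure Key Vault through a second linked service. The names `AzureSqlLS`, `KeyVaultLS`, and `sql-password`, along with the helper function, are hypothetical; the angle-bracket values are placeholders you would replace.

```python
import json


def azure_sql_linked_service(name, connection_string, key_vault_service, secret_name):
    """Linked service whose password is resolved from Azure Key Vault."""
    return {
        "name": name,
        "properties": {
            "type": "AzureSqlDatabase",
            "typeProperties": {
                "connectionString": connection_string,
                "password": {
                    "type": "AzureKeyVaultSecret",
                    "store": {
                        "referenceName": key_vault_service,
                        "type": "LinkedServiceReference",
                    },
                    "secretName": secret_name,
                },
            },
        },
    }


sql_service = azure_sql_linked_service(
    name="AzureSqlLS",
    connection_string=(
        "Server=tcp:<server>.database.windows.net,1433;"
        "Database=<database>;User ID=<user>;"
    ),
    key_vault_service="KeyVaultLS",
    secret_name="sql-password",
)
print(json.dumps(sql_service, indent=2))
```

Keeping the secret in a vault means the definition can be stored in Git or shared without exposing credentials.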

Data Transformation Activity in Azure Data Factory

Data transformation in Azure Data Factory (ADF) is the process of cleaning, shaping, and manipulating your data before it is used for further analysis or loaded into a target destination. It covers tasks such as:

- Cleaning the data

- Creating new data

- Filtering the data

- Combining or Summarizing data
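The four kinds of transformation listed above can be illustrated on a handful of rows in plain Python. This is not ADF code: in ADF you would typically express the same steps visually in a data flow or hand them to an external compute service. The sample rows and column names are made up for the example.

```python
from collections import defaultdict

# Made-up input rows, as they might arrive from a CSV file.
raw_rows = [
    {"region": " EU ", "quantity": "2", "unit_price": "10.0"},
    {"region": "US", "quantity": "1", "unit_price": "5.5"},
    {"region": "EU", "quantity": "", "unit_price": "3.0"},  # missing quantity
    {"region": "US", "quantity": "4", "unit_price": "2.0"},
]


def clean(rows):
    """Cleaning: trim whitespace, fix types, drop incomplete rows."""
    for row in rows:
        if not row["quantity"].strip():
            continue
        yield {
            "region": row["region"].strip(),
            "quantity": int(row["quantity"]),
            "unit_price": float(row["unit_price"]),
        }


def add_total(rows):
    """Creating new data: derive a total column from existing ones."""
    for row in rows:
        yield {**row, "total": row["quantity"] * row["unit_price"]}


def at_least(rows, minimum):
    """Filtering: keep only rows whose total reaches the minimum."""
    return (row for row in rows if row["total"] >= minimum)


def total_by_region(rows):
    """Summarizing: aggregate totals per region."""
    totals = defaultdict(float)
    for row in rows:
        totals[row["region"]] += row["total"]
    return dict(totals)


summary = total_by_region(at_least(add_total(clean(raw_rows)), 6.0))
print(summary)  # {'EU': 20.0, 'US': 8.0}
```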

Scheduling and Monitoring Pipelines

Scheduling Pipelines in Azure Data Factory

Triggers

Pipelines don't run on their own; you need to set up triggers to automate their execution. ADF offers various trigger options:

- Schedule Trigger: Run the pipeline at specific intervals or on a custom schedule.

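- Event Trigger: Start the pipeline when a specific event occurs, like a new file arriving in storage or a notification from another service.

- Tumbling Window Trigger: Run the pipeline over a series of fixed-size, non-overlapping, contiguous time intervals (e.g., process the data for each hour of the day).

- Custom Event Trigger: Start the pipeline when an external application publishes a custom event to Azure Event Grid. ADF has no dedicated webhook trigger type, but an external application can also start a run on demand by sending an HTTP request to the REST API.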

Setting Up Triggers

When you create or edit a pipeline, you can go to the "Triggers" section and choose the desired trigger type. You then configure the trigger's specific settings, like the schedule for a schedule trigger or the event for an event trigger.
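Behind the UI, a trigger is stored as a small JSON definition. The sketch below builds a schedule trigger definition as a Python dictionary and, separately, shows what "tumbling windows" mean by generating the back-to-back time slices such a trigger would cover. The names `HourlyTrigger` and `CopySalesPipeline` and both helper functions are hypothetical; the window generator only illustrates the concept and is not ADF's implementation.

```python
from datetime import datetime, timedelta, timezone


def schedule_trigger(name, pipeline_name, frequency, interval, start_time):
    """Schedule trigger definition that runs one pipeline on a recurrence."""
    return {
        "name": name,
        "properties": {
            "type": "ScheduleTrigger",
            "typeProperties": {
                "recurrence": {
                    "frequency": frequency,
                    "interval": interval,
                    "startTime": start_time.strftime("%Y-%m-%dT%H:%M:%SZ"),
                    "timeZone": "UTC",
                }
            },
            "pipelines": [
                {
                    "pipelineReference": {
                        "referenceName": pipeline_name,
                        "type": "PipelineReference",
                    }
                }
            ],
        },
    }


def tumbling_windows(start, end, size):
    """Yield fixed-size, non-overlapping, contiguous (start, end) windows."""
    window_start = start
    while window_start + size <= end:
        yield window_start, window_start + size
        window_start += size


start = datetime(2024, 1, 1, tzinfo=timezone.utc)
trigger = schedule_trigger("HourlyTrigger", "CopySalesPipeline", "Hour", 1, start)
windows = list(tumbling_windows(start, start + timedelta(hours=3), timedelta(hours=1)))

for window_start, window_end in windows:
    print(window_start.isoformat(), "to", window_end.isoformat())
```

Each window ends exactly where the next begins, which is why tumbling windows suit jobs that must process every time slice exactly once.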

Monitor Pipelines in Azure Data Factory

- Monitoring Interface: Azure Data Factory provides a visual interface to examine your pipeline runs. It shows a list of triggered pipeline runs, including their status (success, failed, running), start and end times, and any errors that may have occurred.

- Detailed Views: You can click on a specific pipeline run to see detailed information, such as the activity runs within the pipeline, execution logs, and data outputs.

- Alerts: Azure Data Factory lets you set up alerts to be notified about pipeline failures or other critical events. You can configure email or webhook notifications to be sent to designated recipients.
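If you export run history, or fetch it through the API, the same monitoring questions can be answered in a few lines of code. The sketch below works on made-up run records; the field names follow the shape of pipeline run records (`runId`, `pipelineName`, `status`, `message`), but the data and helper functions are purely illustrative.

```python
from collections import Counter

# Made-up run records for illustration.
runs = [
    {"runId": "run-001", "pipelineName": "CopySalesPipeline",
     "status": "Succeeded", "message": ""},
    {"runId": "run-002", "pipelineName": "CopySalesPipeline",
     "status": "Failed", "message": "Sink table not found"},
    {"runId": "run-003", "pipelineName": "LoadWarehouse",
     "status": "InProgress", "message": ""},
    {"runId": "run-004", "pipelineName": "LoadWarehouse",
     "status": "Failed", "message": "Timeout"},
]


def status_counts(runs):
    """Count runs per status, like the summary in the monitoring view."""
    return Counter(run["status"] for run in runs)


def failure_alerts(runs):
    """Build one alert message per failed run."""
    return [
        f"Pipeline {run['pipelineName']} failed (run {run['runId']}): {run['message']}"
        for run in runs
        if run["status"] == "Failed"
    ]


print(dict(status_counts(runs)))
for alert in failure_alerts(runs):
    print(alert)
```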

Advantages of Using Azure Data Factory

- Connects to Multiple Data Sources: Azure Data Factory supports more than 90 data sources, including SQL Server, Excel, APIs, Azure Blob Storage, and more, making it easy to bring data in from anywhere.

- Low-Code or No-Code Development: You don’t need to be an expert in programming to use it. You can build data pipelines using a simple drag-and-drop interface.

- Automated Data Workflows: You can schedule and automate tasks like data movement, transformation, and loading. This saves time and reduces manual errors.

- Cloud-Based and Scalable: Since it’s built on Azure, it can handle both small and large data workloads. It scales automatically based on your data needs.

- Integration with Azure Services: ADF works smoothly with other Microsoft services like Power BI, Azure SQL, and Azure Synapse, helping you build complete data solutions in one platform.

Conclusion

Azure Data Factory is a robust cloud-based data integration service that empowers organizations to orchestrate and automate their data pipelines efficiently. By providing a strong platform for data movement, transformation, and management, ADF simplifies complex data integration challenges.


FAQs

- Data Encryption

- Credential Management

- Network Security

- Access Control

- Monitoring and Auditing
