18

AprData Ingestion in Machine Learning

Introduction

Data has become an essential resource for businesses looking to gather insightful knowledge and make wise decisions in the age of big data as well as advanced analytics. This data can be used to create algorithms and models that can make precise predictions and automate operations thanks to machine learning, a subfield of artificial intelligence. However, data needs to be fed into the system to make sure it is available, correctly formatted, and prepared for analysis before algorithms for machine learning can work their magic.

Data ingestion is the procedure of gathering, importing, & preparing data from multiple sources for use in machine learning operations. It entails gathering information from numerous sources, including databases, files, APIs, streaming platforms, and even other websites, and making it accessible in a format that is appropriate for analysis. Data ingestion is an important phase in the process of machine learning since the correctness, completeness, and quality of the ingested data directly impact the effectiveness and dependability of the models that are produced.

The variety of data sources is one of the main difficulties in data ingestion. Data in organizations are frequently dispersed throughout numerous systems, databases, & file types. Data that is structured, semi-structured, or unstructured must all be accommodated in data input processes in order to handle this diversity. Additionally, real-time data streams must be handled by data intake because many applications depend on continuous data ingestion and processing to provide up-to-date insights and support real-time decision-making.

Data cleansing & transformation are also essential components of data input. Machine learning models' accuracy may be negatively impacted by the existence of duplicates, errors, missing values, or other irregularities in raw data. As a result, in order to guarantee the quality and integrity of the data, it must be meticulously cleansed, standardized, and converted. Depending on the specific needs of the machine learning challenge at hand, this might involve activities like data deletion, outlier elimination, data type transformation, feature engineering, and more.

Data ingestion has improved in recent years thanks to developments in cloud computing as well as distributed systems. Technologies like Apache Kafka, Apache NiFi, & AWS Glue offer reliable frameworks for data input, making it possible to collect, transform, and integrate huge volumes of data from many sources. To ensure the dependability and effectiveness of the data import process, these solutions frequently contain the use of parallel processing, tolerance for faults, and data validation techniques.

Data Ingestion

Data collection, importation, and preparation for use as input by machine learning models and algorithms are referred to as "data ingestion" for machine learning. It entails gathering data from various sources, including files, databases, APIs, streaming platforms, and external websites, and converting it into a format appropriate for analysis & model training.

Making the data accessible, organized, and prepared for future processing is the main goal of data ingestion in machine learning. Data collecting, data cleansing, data transformation, as well as data integration are all included in this process. By taking these procedures, you can be sure that the data is reliable, consistent, and organized so that machine learning algorithms can use it efficiently.

Data intake is essential because the attributes of the input data have a big impact on the effectiveness and precision of machine learning models. Predictions may be skewed or erroneous if the ingested data is noisy, lacking, or inconsistent. In order to improve the quality and use of the data, data intake methods frequently include data preparation techniques such as data cleaning, normalization, feature extraction, and encoding.

Additionally, the scalability and effectiveness of the data processing process should be taken into account while ingesting data for machine learning. Having effective systems in place to manage massive datasets, live data streams, and dispersed data sources is crucial as the amount of data keeps increasing exponentially. These scaling issues are assisted by technologies including distributed computing frameworks, based on the cloud data input platforms, & stream processing systems.

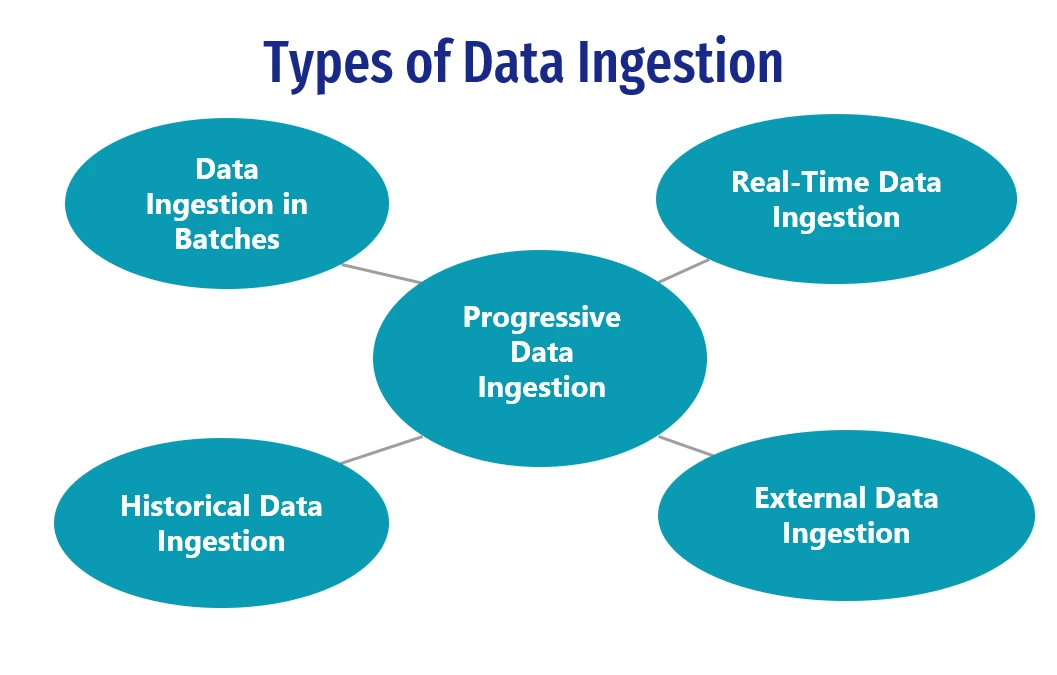

Types of Data Ingestion

Based on the qualities & sources of the data getting ingested, there are various categories into which data intake in machine learning can be divided. The following are some typical forms of data ingestion:

- Data Ingestion in Batches: Data is gathered and processed in small batches during batch data ingestion. It often entails importing and analyzing a sizable amount of static data from sources including log files, databases, data warehouses, and flat files. When offline processing or regular intervals of processing are sufficient and immediate processing is not necessary, batch data input is often used.

- Real-Time Data Ingestion: Real-time data ingestion is concerned with collecting and analyzing information as it comes in almost instantly. When quick processing and evaluation of data are necessary for time-sensitive applications, it is used. Streaming data from IoT devices, financial markets, social media feeds, sensors, and other sources can be ingested in real time. Real-time handling of massive, continuous data streams typically involves the use of tools such as Apache Kafka, Apache Flink, and AWS Kinesis.

- Progressive Data Ingestion: Progressive data ingestion refers to the process of adding new or updated data to an already-existing dataset. When additional data must be added to a previously processed dataset without repeating the entire dataset, it is frequently used. When working with huge datasets or when a steady stream of new data needs to be incorporated into the old dataset, this method is effective.

- Historical Data Ingestion: The process of integrating & incorporating historical data to the process of machine learning is referred to as historical data ingestion. When performing past analysis or when developing machine learning models that need a sizable amount of historical data for precise predictions, this kind of intake is helpful. Databases, data warehouses, old files, and other related sources are all possible places to find historical data.

- External Data Ingestion: Adding data from outside sources to the machine learning process is known as external data ingestion. Data from third-party APIs, open data sets, web scraping, and other external data suppliers can be included in this. External data import enables businesses to add more pertinent data to their existing datasets, improving the precision & durability of the models.

Data Ingestion Challenges

To assure the quality, dependability, as well as the effectiveness of the data process, there are a number of issues that can arise throughout the data import process in machine learning. Here are some typical data ingestion challenges:

- Data Complexity and Variety: Different types, forms, and structures of data, such as unstructured data, semi-structured data, and structured data are possible. Flexible data ingestion systems that can accept many data sources & formats are needed to handle this variability. To prepare unstructured data for machine learning algorithms, such as text or images, additional preprocessing, as well as feature extraction procedures may be necessary.

- Data Volume & Scalability: Due to the increasing volume of data, it is extremely difficult to manage and handle massive amounts of data effectively. Processes for ingesting data should be scalable as well as prepared to handle big data scenarios. Cloud-based technologies, distributed computing, and parallel processing can all be used to solve the scalability problem and speed up the efficient ingestion of large datasets.

- Cleaning and enhancing the data: Machine learning models can perform poorly when working with raw data because it frequently has noise, missing values, errors, or outliers. To find and address these problems, data cleansing, as well as quality control procedures, are required. To guarantee data integrity and accuracy, data cleaning methods like deduplication, outlier removal, & imputation approaches are used.

- Data Integration & Transformation: Merging data from many sources, that may have various schemas, formats, and data types, is frequently involved in the data ingestion process. When integrating and harmonizing these various datasets into a single format, data integration issues appear. For preparing the data for machine learning algorithms, further data transformation procedures like feature engineering, normalization, and encoding may be necessary.

- Real-Time Data Ingestion: Data ingestion needs to be able to manage continuous data streams as well as analyze data in close to real-time in circumstances where real-time and streaming data is involved. This calls for specialized software and hardware that can process and analyze data as it comes in while frequently adhering to strict latency specifications.

- Data Security & Privacy: When processing data, privacy and security issues must be taken into account. Data ingestion requires careful consideration of authentication procedures, secure data communication, & observance of data protection laws. To protect data privacy and confidentiality, sensitive and personally identifiable information (PII) should be handled with the proper precautions.

- Data governance & metadata management: For efficient data ingestion, it's crucial to establish sound data governance procedures and metadata management. Guarantee data traceability, provenance, & compliance, entails capturing information, tracking data lineage, and keeping data cataloging.

Summary

The process of gathering, importing, and processing data from diverse sources for analysis & model training is known as data intake in machine learning. It entails gathering data from various sources, including databases, files, APIs, and streaming platforms, and formatting it appropriately. The features of the imported data have a substantial impact on how well machine learning models function.

Data ingestion comes in many forms. Processing massive amounts of data at predetermined periods is known as batch data ingestion. Data is captured and processed in real-time during ingestion, frequently from streaming sources. New data is appended to an established dataset by incremental data input. Ingestion of historical data includes historical data for analysis or training. Collecting data from external sources is known as external data ingestion.

To handle a variety of data formats as well as structures, manage large data volumes, ensure data quality through cleaning and preprocessing, integrate and transform data from various sources, handle real-time data streams, address privacy and security concerns, and set up data governance as well as metadata management practices are just a few of the challenges associated with data ingestion.

Organizations use scalable technologies like cloud-based platforms and distributed computing to overcome these issues. They use technologies for real-time data processing, data integration techniques, and data cleaning techniques. To ensure compliance as well as data integrity, data security measures & metadata management procedures are put into place.

Generating accurate predictions and wise decisions requires effective machine learning, which can only be achieved with efficient data ingestion. Organizations may gain valuable knowledge from their data & promote innovation by overcoming these challenges.