Production in Machine Learning

Introduction

Machine learning (ML), which enables systems to learn from data and make informed decisions, has transformed a number of industries. However, the ML lifecycle involves much more than building and training models. The real value of machine learning is realized only when models are deployed and integrated into production systems, where they can deliver real-time predictions and insights.

In machine learning, "production" refers to putting trained models to use in a live production environment. It covers the deployment, management, and maintenance of ML models so that they can deliver predictions or support decision-making in real applications effectively and reliably.

The production stage is crucial because it involves concerns beyond model performance and accuracy: monitoring, security, scalability, reliability, and latency. In production, ML models must handle large volumes of data, return predictions quickly, adapt to changing conditions, and integrate seamlessly with existing software systems.

What is a production ML system?

A production machine learning system is one that has been deployed and is operating in a production environment, where it supports decision-making processes or serves real-time predictions. It represents the culmination of the machine learning development process: the point at which trained models are integrated into a live environment to produce value.

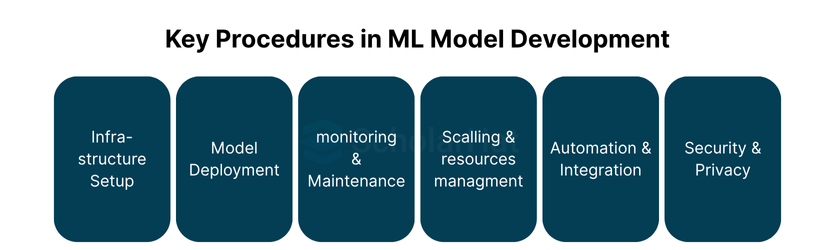

Key Procedures in Putting ML Models into Production

- Model Packaging: A trained ML model must be packaged so that it is easy to deploy and run in a production setting. This typically means converting the model into a serialized format that suits the chosen deployment platform or framework.

- Infrastructure Setup: Deploying and running ML models requires reliable infrastructure. Servers, cloud instances, or packaged (for example, containerized) environments must be set up for the models to execute on. Scalability, accessibility, and resource management are all important aspects of this infrastructure.

- Model Deployment: Once the necessary infrastructure is in place, the ML models are released into production. In this step, the deployment framework is configured, APIs or services are integrated, and the model is made ready to accept input data and return predictions.

- Monitoring and Maintenance: Deployed models require ongoing monitoring to ensure they keep performing well. Monitoring involves tracking performance metrics, detecting anomalies, and responding to errors or failures. Regular maintenance and updates are also needed to improve performance and address new issues as they arise.

- Scaling and Resource Management: As usage of and demand for ML models grow, they must be scaled to meet increased workloads. This involves managing parallel processing, optimizing resource allocation, and ensuring the models can handle high data volumes without performance degradation.

- Automation and Integration: ML models are often one component of larger software systems or workflows. Integrating them with existing systems requires building APIs, data streams, or real-time interfaces that collect inputs and deliver predictions. Automating model deployment and updates streamlines the production process and reduces manual work.

- Security and Privacy: Protecting ML models and the data they handle is critical. Security measures should be put in place to prevent unauthorized access, data breaches, or attacks on deployed models. Privacy concerns must also be addressed, especially when handling sensitive data.
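The packaging step in the list above can be illustrated with a minimal sketch. It assumes a toy linear model and uses JSON from Python's standard library as the serialized format; `LinearModel`, `package_model`, and `load_package` are hypothetical names, and real projects usually rely on the serialization format of their ML framework or serving platform instead. Storing a version string next to the parameters lets the serving side refuse a package it was not expecting.

```python
import io
import json
from dataclasses import dataclass


@dataclass
class LinearModel:
    """Hypothetical stand-in for a trained model: y = w . x + b."""
    weights: list
    bias: float

    def predict(self, features):
        return sum(w * x for w, x in zip(self.weights, features)) + self.bias


def package_model(model, version, stream):
    """Serialize the model's parameters, plus a version tag, as JSON."""
    json.dump({"version": version, "weights": model.weights, "bias": model.bias}, stream)


def load_package(stream, expected_version):
    """Rebuild the model, rejecting packages with an unexpected version."""
    package = json.load(stream)
    if package["version"] != expected_version:
        raise ValueError(f"expected version {expected_version}, got {package['version']}")
    return LinearModel(weights=package["weights"], bias=package["bias"])


# Round-trip through an in-memory buffer; a real pipeline would write to a file or artifact store.
buffer = io.StringIO()
package_model(LinearModel(weights=[0.5, 2.0], bias=1.0), "1.0.0", buffer)
buffer.seek(0)
restored = load_package(buffer, expected_version="1.0.0")
print(restored.predict([2.0, 3.0]))  # 8.0
```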
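For the deployment step, where an API is configured to accept input data and return predictions, the following sketch uses Python's built-in `http.server`. The JSON request shape (`{"features": [...]}`), the hard-coded `WEIGHTS` and `BIAS`, and the names `handle_request` and `PredictHandler` are all assumptions for illustration; a real deployment would typically run behind a production-grade serving framework.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer

WEIGHTS = [0.5, 2.0]  # hypothetical model parameters
BIAS = 1.0


def predict(features):
    return sum(w * x for w, x in zip(WEIGHTS, features)) + BIAS


def handle_request(body):
    """Validate a JSON request body; return (HTTP status, response payload)."""
    try:
        features = json.loads(body)["features"]
    except (ValueError, KeyError, TypeError):
        return 400, {"error": "body must be a JSON object with a 'features' key"}
    if (
        not isinstance(features, list)
        or len(features) != len(WEIGHTS)
        or not all(isinstance(x, (int, float)) and not isinstance(x, bool) for x in features)
    ):
        return 400, {"error": f"'features' must be a list of {len(WEIGHTS)} numbers"}
    return 200, {"prediction": predict(features)}


class PredictHandler(BaseHTTPRequestHandler):
    def do_POST(self):
        length = int(self.headers.get("Content-Length", 0))
        status, payload = handle_request(self.rfile.read(length))
        data = json.dumps(payload).encode()
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(data)))
        self.end_headers()
        self.wfile.write(data)


# To serve locally (blocks until interrupted):
# HTTPServer(("127.0.0.1", 8000), PredictHandler).serve_forever()
print(handle_request(b'{"features": [2.0, 3.0]}'))  # (200, {'prediction': 8.0})
```

Keeping validation in `handle_request`, separate from the HTTP plumbing, makes it testable without starting a server and ensures malformed input gets a 400 response instead of crashing the handler.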
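Monitoring can start as simply as tracking recent outcomes and raising an alert when a performance indicator crosses a threshold. This sketch assumes each request is recorded as a success or a failure; the `RollingMonitor` class and its window size and threshold are hypothetical, and a real system would usually export such metrics to a dedicated monitoring tool.

```python
from collections import deque


class RollingMonitor:
    """Track the most recent request outcomes and flag a high error rate.

    `window` and `max_error_rate` are hypothetical defaults, not recommendations.
    """

    def __init__(self, window=100, max_error_rate=0.05):
        self.outcomes = deque(maxlen=window)  # True = success, False = failure
        self.max_error_rate = max_error_rate

    def record(self, success):
        # Once the deque is full, appending drops the oldest outcome.
        self.outcomes.append(success)

    def error_rate(self):
        if not self.outcomes:
            return 0.0
        return self.outcomes.count(False) / len(self.outcomes)

    def should_alert(self):
        return self.error_rate() > self.max_error_rate


monitor = RollingMonitor(window=10, max_error_rate=0.2)
for success in [True] * 7 + [False] * 3:
    monitor.record(success)
print(monitor.error_rate(), monitor.should_alert())  # 0.3 True
```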
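One way to manage parallel processing for large input volumes is to split the inputs into chunks and score them on a worker pool. In the sketch below, `predict_one` is a hypothetical stand-in for a real model call. Threads help mainly when that call releases Python's GIL (as many native inference libraries and network calls to a remote model server do); for CPU-bound pure-Python models, `ProcessPoolExecutor` would be the usual swap.

```python
from concurrent.futures import ThreadPoolExecutor


def predict_one(features):
    """Hypothetical model call; a real one might invoke a native library or remote server."""
    return sum(features)


def chunked(items, size):
    """Split a list into consecutive chunks of at most `size` items."""
    return [items[i:i + size] for i in range(0, len(items), size)]


def predict_chunk(chunk):
    return [predict_one(features) for features in chunk]


def parallel_predict(inputs, chunk_size=64, max_workers=4):
    """Score inputs chunk by chunk on a thread pool; results keep the input order."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # pool.map yields chunk results in submission order, not completion order.
        chunk_results = pool.map(predict_chunk, chunked(inputs, chunk_size))
        return [y for chunk in chunk_results for y in chunk]


print(parallel_predict([[i, i + 1] for i in range(10)], chunk_size=3))
# [1, 3, 5, 7, 9, 11, 13, 15, 17, 19]
```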
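Automating model upgrades usually involves a gate that decides whether a new model may replace the live one. As a sketch, assuming models are plain callables and that accuracy on a held-out validation set is the promotion criterion, the hypothetical `Deployment` class below promotes a candidate only if it outperforms the live model, and keeps the previous version so the change can be rolled back.

```python
def accuracy(model, examples):
    """Fraction of (features, label) pairs that `model` predicts correctly."""
    correct = sum(1 for features, label in examples if model(features) == label)
    return correct / len(examples)


class Deployment:
    """Hypothetical holder for the live model, with gated upgrades and rollback."""

    def __init__(self, model):
        self.live = model
        self.previous = None

    def try_upgrade(self, candidate, validation, min_gain=0.0):
        """Promote `candidate` only if it beats the live model by more than `min_gain`."""
        if accuracy(candidate, validation) > accuracy(self.live, validation) + min_gain:
            self.previous, self.live = self.live, candidate
            return True
        return False

    def rollback(self):
        """Restore the previously live model, if there is one."""
        if self.previous is not None:
            self.live, self.previous = self.previous, None


def always_positive(x):
    return True


def sign_model(x):
    return x > 0


validation = [(x, x > 0) for x in [-2, -1, 1, 2]]
deployment = Deployment(always_positive)  # 50% accurate on `validation`
print(deployment.try_upgrade(sign_model, validation))  # True (100% > 50%)
```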
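A basic safeguard against unauthorized access is requiring clients to present an API key. The sketch below assumes keys are issued out of band and that only their SHA-256 digests are stored (`API_KEY_DIGESTS` and the example key are hypothetical). It compares digests with `hmac.compare_digest` to avoid leaking information through comparison timing.

```python
import hashlib
import hmac

# Hypothetical: store only SHA-256 digests of issued keys, never the keys themselves.
API_KEY_DIGESTS = {hashlib.sha256(b"example-key-123").hexdigest()}


def is_authorized(presented_key):
    """Check a client-supplied key against the stored digests."""
    digest = hashlib.sha256(presented_key.encode()).hexdigest()
    # compare_digest avoids early-exit string comparison that could leak timing information.
    return any(hmac.compare_digest(digest, known) for known in API_KEY_DIGESTS)


print(is_authorized("example-key-123"))  # True
```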

What guarantees should production ML systems provide?

To ensure that production ML systems are dependable, perform well, and are effective overall, organizations aim to provide a number of guarantees. These include:

- Effectiveness and Scalability: Production ML systems should be built to process large amounts of data, serve predictions, and produce insights with low latency. They must scale to handle growing workloads without compromising performance. Guarantees on response times, throughput, and resource usage help ensure the system can handle the anticipated load efficiently.

- Reliability and Availability: Production ML systems should be highly dependable and available, minimizing downtime and service interruptions. Businesses often commit to uptime guarantees so that the system keeps working and remains available to users. Strategies such as redundancy, fault tolerance, and load balancing are used to achieve high availability.

- Accuracy and Quality: ML models in production need to maintain a high level of accuracy and quality in their predictions or insights. Organizations aim to offer guarantees about model performance, such as acceptable error rates or minimum accuracy thresholds, and continually evaluate the models to confirm they meet these criteria.

- Security and Privacy: Production ML systems face strict security and privacy requirements. Organizations must guarantee the security and integrity of the data the system handles, which involves implementing strong access controls, encryption, and secure communication protocols. Compliance with privacy and data protection laws is also crucial.

- Monitoring and Maintenance: Organizations commit to regularly monitoring the health and behavior of production ML systems so that anomalies, errors, or failures are found and fixed quickly. Routine maintenance and updates improve performance, fix problems, and close security holes. Companies may also commit to response times for resolving issues or delivering upgrades.

- Compliance and Governance: Production ML systems may have to follow industry-specific regulations or governance criteria. Organizations work to ensure their systems meet compliance requirements such as data governance, auditability, and accountability, and may guarantee adherence to specific industry rules or standards.

- Support and Documentation: Businesses strive to give users of ML systems thorough guidance and assistance. This includes comprehensive documentation of model requirements, data pipelines, APIs, and system architecture, along with support channels for user inquiries, problems, and requests for help. Guarantees may be made about the availability of self-service resources or response times for support inquiries.
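Guarantees about response times are often expressed as a percentile target, such as "95% of requests complete within 200 ms". The sketch below checks such a target using the nearest-rank percentile; the 95th percentile and the 200 ms target are hypothetical defaults, not recommended values.

```python
import math


def percentile(values, p):
    """Nearest-rank percentile: the smallest value with at least p% of samples at or below it."""
    if not values:
        raise ValueError("no samples")
    ordered = sorted(values)
    rank = max(1, math.ceil(p * len(ordered) / 100))
    return ordered[rank - 1]


def meets_latency_target(latencies_ms, p=95, target_ms=200.0):
    """True if the p-th percentile latency is at or below the (hypothetical) target."""
    return percentile(latencies_ms, p) <= target_ms


latencies = [120] * 18 + [180, 450]  # 20 hypothetical request latencies in ms
print(percentile(latencies, 95), meets_latency_target(latencies))  # 180 True
```

The single 450 ms outlier does not break the target, because a 95th-percentile guarantee over 20 samples tolerates one slow request; that tolerance is exactly what a percentile-based guarantee encodes, in contrast to a worst-case bound.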
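An uptime guarantee translates directly into a downtime budget: the allowed downtime is (1 - availability) multiplied by the length of the period. A 99.9% target over a 30-day month, for instance, leaves about 43.2 minutes. The short helper below is a sketch that assumes a 30-day period by default.

```python
def allowed_downtime_minutes(availability, days=30.0):
    """Maximum downtime per period that still meets an uptime target such as 0.999."""
    if not 0.0 <= availability <= 1.0:
        raise ValueError("availability must be between 0 and 1")
    return (1.0 - availability) * days * 24 * 60


for target in (0.99, 0.999, 0.9999):
    print(f"{target:.2%} uptime -> {allowed_downtime_minutes(target):.1f} min per 30 days")
```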
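Redundancy and fault tolerance can be illustrated with a client that tries a list of redundant replicas in order and falls back to the next one when a call fails. In this sketch the replicas are plain Python callables and a failure is signaled by `ConnectionError` or `TimeoutError`; in a real system the replicas would be network endpoints, usually behind a load balancer.

```python
def predict_with_failover(replicas, features):
    """Return the first successful prediction from an ordered list of redundant replicas."""
    errors = []
    for replica in replicas:
        try:
            return replica(features)
        except (ConnectionError, TimeoutError) as exc:
            errors.append(exc)  # remember the failure and try the next replica
    raise RuntimeError(f"all {len(replicas)} replicas failed: {errors!r}")


def unavailable_replica(features):
    raise ConnectionError("replica unavailable")


def healthy_replica(features):
    return sum(features)


print(predict_with_failover([unavailable_replica, healthy_replica], [1, 2, 3]))  # 6
```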
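On the privacy side, one common measure is to pseudonymize identifying fields before data is logged or stored. This sketch replaces hypothetical sensitive fields (`email`, `user_id`) with keyed HMAC-SHA256 digests, so the same input always maps to the same token without exposing the raw value. The hard-coded key is a placeholder; note also that pseudonymized data may still count as personal data under some privacy laws.

```python
import hashlib
import hmac

# Placeholder secret; in practice it would come from a secrets manager, not source code.
PSEUDONYM_KEY = b"replace-with-a-secret-key"
SENSITIVE_FIELDS = {"email", "user_id"}  # hypothetical field names


def pseudonymize(record):
    """Replace sensitive fields with keyed HMAC-SHA256 digests; copy the rest unchanged."""
    cleaned = {}
    for field, value in record.items():
        if field in SENSITIVE_FIELDS:
            cleaned[field] = hmac.new(PSEUDONYM_KEY, str(value).encode(), hashlib.sha256).hexdigest()
        else:
            cleaned[field] = value
    return cleaned


print(pseudonymize({"email": "a@example.com", "age": 42}))
```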
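Auditability often comes down to recording which model produced which prediction, and when. The sketch below builds one JSON line per prediction with a UTC timestamp, the model version, and a SHA-256 hash of the input, so the log does not retain raw inputs; the field names and the `audit_record` helper are hypothetical. Such lines would typically be appended to append-only storage.

```python
import hashlib
import json
from datetime import datetime, timezone


def audit_record(model_version, features, prediction):
    """Build one JSON line recording what was predicted, when, and by which model."""
    # sort_keys gives a canonical encoding, so equal inputs always hash identically.
    canonical = json.dumps(features, sort_keys=True).encode()
    entry = {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "model_version": model_version,
        "input_sha256": hashlib.sha256(canonical).hexdigest(),
        "prediction": prediction,
    }
    return json.dumps(entry, sort_keys=True)


print(audit_record("1.0.0", {"age": 42, "income": 50000}, 0.87))
```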

Summary:

Machine learning production covers the deployment, administration, and maintenance of trained ML models in a production setting. Beyond model development, it emphasizes scalability, reliability, latency, monitoring, security, and system integration. A production machine learning system is the operational deployment of ML models to support decision-making or serve real-time predictions.

The essential aspects of putting ML models into production are model packaging, infrastructure setup, model deployment, monitoring and maintenance, scaling, integration, and addressing security and privacy concerns. To make production ML systems efficient and dependable, organizations aim to provide guarantees covering effectiveness and scalability, reliability and availability, accuracy and quality, security and privacy, monitoring and maintenance, compliance and governance, and support and documentation.

By meeting these requirements, organizations can ensure that their ML systems handle large data volumes, deliver real-time predictions with sufficient accuracy, remain stable and available, safeguard sensitive data, and comply with regulations. They also aim to give users thorough documentation and support, responding quickly to questions and problems.