26 Dec

Microservices Deployment Patterns

Issues you need to think about while deploying a Microservice:

Once you have created your microservices, you need to deploy them. This article looks at the forces, or issues, you need to address when deploying a microservice:

Services may be written in a variety of languages and may use different frameworks or different framework versions, for example different versions of Java or the Spring Framework.

Each service consists of multiple service instances at runtime. For example, a catalog service may need to run several copies to handle the load and provide high availability, so that the remaining instances can take over the requests if one instance fails.

The whole process of building and deploying services needs to be fast. When someone makes a change, it should be tested and deployed automatically and quickly.

Services need to be deployed and scaled independently, which is one of the primary motivations behind microservices.

Service instances need to be isolated from one another. If one service misbehaves, ideally it should not affect any other service.

We should be able to constrain the resources a given service uses. That means defining how much CPU, memory, or bandwidth a particular service can consume, so that a single service cannot eat up all the available resources.

The whole process of deploying changes to production needs to be reliable. It should be an easy, stress-free process that does not fail.

Deployment should be cost-effective
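The point about running enough instances for the rest to absorb a failure can be made concrete with a little arithmetic. The following is a minimal sketch, not a sizing rule: the function names, the per-instance throughput figure, and the peak load are all hypothetical values chosen for illustration.

```python
import math


def instances_needed(peak_rps: float, rps_per_instance: float,
                     tolerated_failures: int = 1) -> int:
    """Instances to run so the survivors can still carry the peak load.

    All inputs are assumptions you would measure for your own service.
    """
    if peak_rps < 0 or rps_per_instance <= 0 or tolerated_failures < 0:
        raise ValueError("load and capacity must be positive, failures non-negative")
    # Instances required just to serve the peak load (at least one).
    base = max(math.ceil(peak_rps / rps_per_instance), 1)
    # Spare instances so that losing some of them leaves enough capacity.
    return base + tolerated_failures


def survives_failure(total_instances: int, peak_rps: float,
                     rps_per_instance: float, failed: int = 1) -> bool:
    """True if the remaining instances can handle the peak load."""
    remaining = total_instances - failed
    return remaining * rps_per_instance >= peak_rps


if __name__ == "__main__":
    # Hypothetical catalog service: 900 requests/s at peak, 200 requests/s per instance.
    n = instances_needed(peak_rps=900, rps_per_instance=200)
    print(n)                                # 6
    print(survives_failure(n, 900, 200))    # True
    print(survives_failure(5, 900, 200))    # False: 4 survivors handle only 800 requests/s
```

The trade-off is visible in the numbers: the spare instance buys availability at the price of extra capacity that sits idle most of the time, which is where the cost-effectiveness concern comes in.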

There are several patterns for deploying your services:


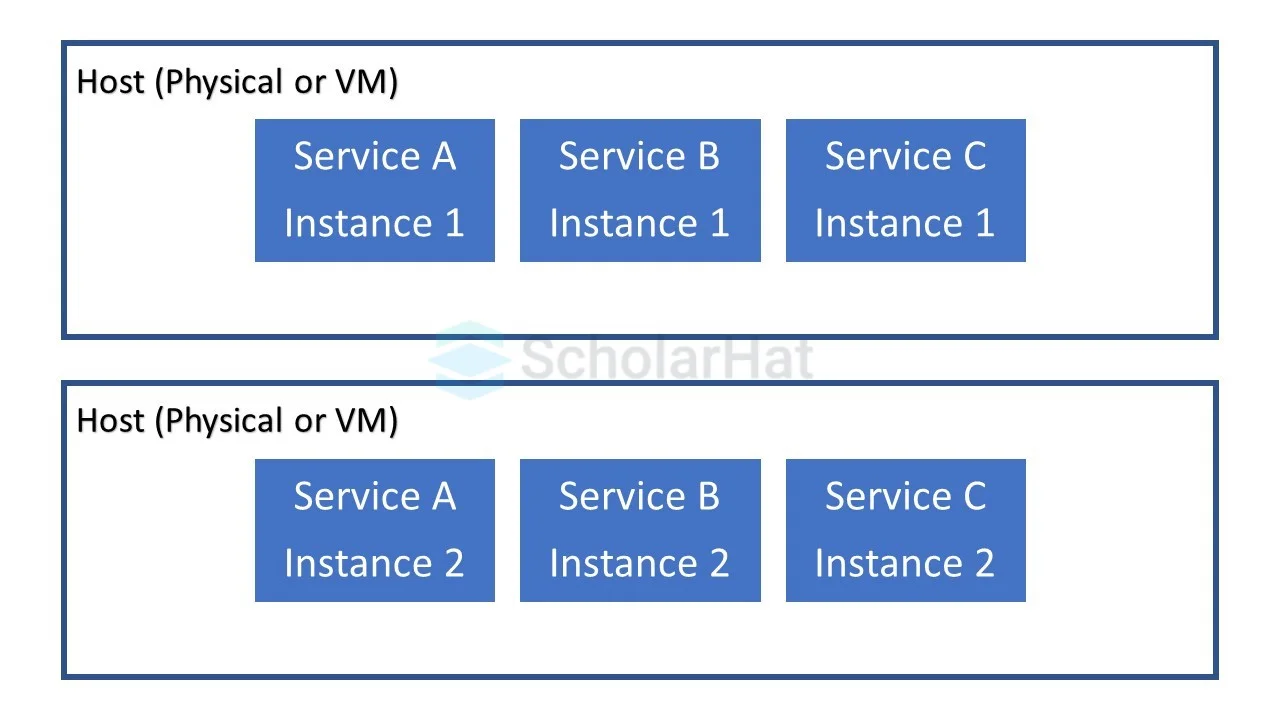

Multiple Service Instances Per Host

This is a fairly traditional approach: you have a machine, which could be physical or virtual, and you run multiple service instances on it. Each service instance can be its own process, such as a JVM or a Tomcat instance, or a single process can host several service instances. For example, each service instance could be a WAR file, with multiple WAR files deployed on one Tomcat server. Some of the benefits of using this pattern are:

Efficient resource utilization: You have one machine with multiple services, and its resources are shared between them

Fast deployment: To deploy a new version of your service, you simply copy it to the machine and restart the process

Some of the drawbacks of using this pattern are:

You get little to no isolation between the service instances

Poor visibility into how each service is behaving, for example its memory and CPU utilization

Difficult to constrain the resources a particular service can use

Risk of dependency version conflicts

Poor encapsulation of implementation technology. Whoever deploys the service needs detailed knowledge of the technology stack and mechanisms used by each service

Service Instance Per Host

For greater isolation and manageability, we can run a single service instance on its own host, at the cost of resource utilization. This pattern can be implemented in two ways: Service Instance per Virtual Machine and Service Instance per Container.

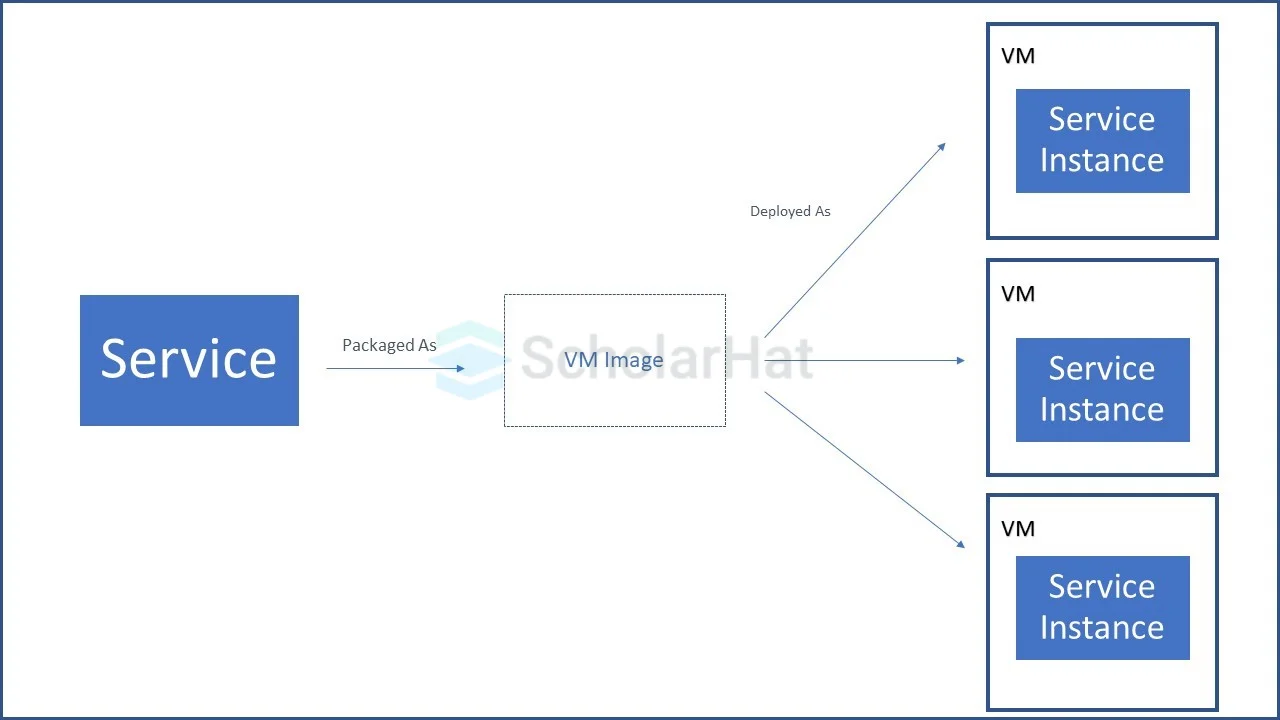

Service Instance Per VM

Here you package each service as a virtual machine image and deploy each service instance as a virtual machine instance. One example is Netflix, whose video service consists of more than 600 services, with many instances of each service.

Some of the benefits of packaging services as virtual machines are:

You get strong isolation, because virtual machines are highly isolated from each other

You get greater manageability, because virtual machine environments come with mature management tools

The virtual machine encapsulates the implementation technology of the microservice. Once you package the service, the API for starting and stopping it is the virtual machine interface. The deployment team does not need to know the implementation technology

With this pattern you can leverage cloud infrastructure for auto-scaling and load balancing

Some of the drawbacks of using this pattern are:

Resource utilization is less efficient, which increases cost

Building a virtual machine image is relatively slow because of the volume of data that has to be moved around the network, which slows the deployment process

In some cases, the virtual machines take some time to boot

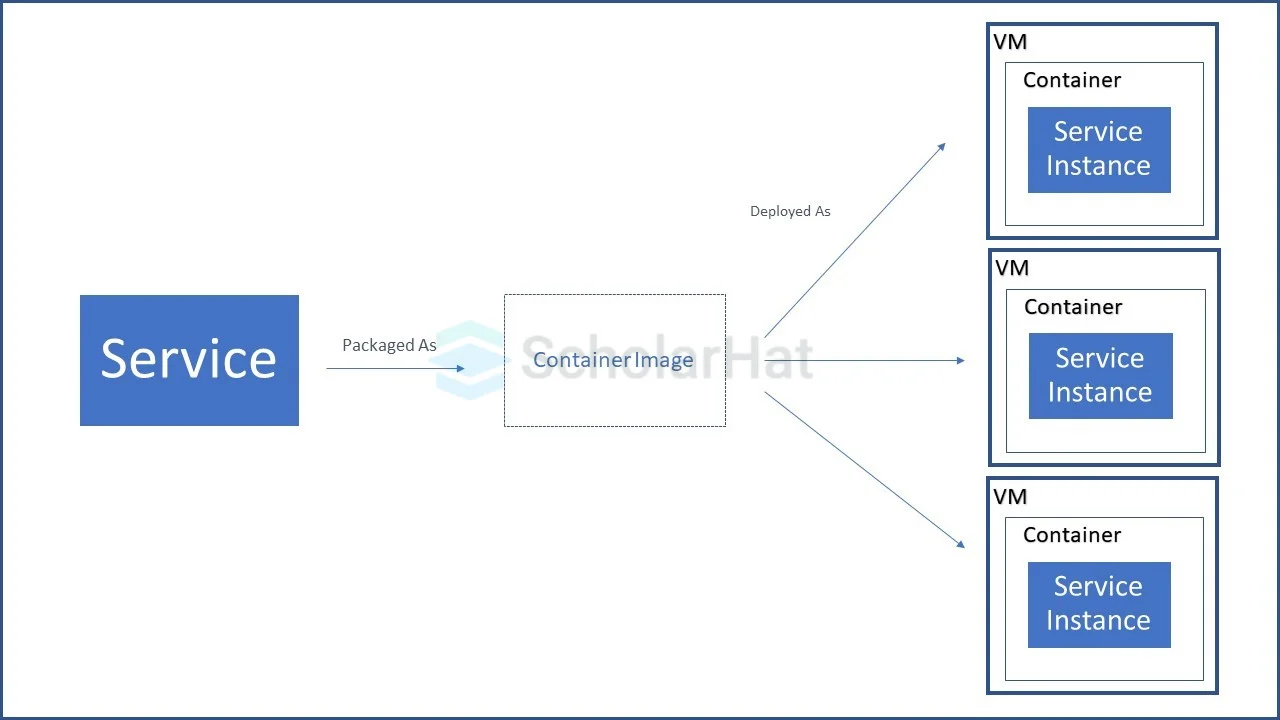

Service Instance Per Container

In this pattern the service is packaged as a container image and deployed as a running container, and you can have multiple containers running on the same virtual machine. Some of the benefits of packaging services as containers are:

Greater isolation, as containers are isolated from each other at the OS level

Greater manageability with containers

The container encapsulates the implementation technology

Efficient resource utilization, as containers are lightweight and share the resources of the same host

Fast deployments

Some of the drawbacks of packaging services as containers are:

Container technology is newer and not as mature as virtual machine technology

Containers are not as secure as VMs

Serverless Deployment

Serverless deployment hides the underlying infrastructure: it takes your service's code and simply runs it. You are charged based on usage, that is, on how many requests are processed and how many resources are consumed in processing each request. To use this pattern, you package your code and select the desired performance level. Various public cloud providers offer this service, using containers or virtual machines to isolate the services, but these details are hidden from you.

In this model, you do not manage any low-level infrastructure such as servers, operating systems, virtual machines, or containers. AWS Lambda, Google Cloud Functions, and Azure Functions are a few serverless deployment environments. A serverless function can be called directly through a web service request, in response to an event, via an API gateway, or periodically according to a cron-like schedule. Some of the benefits of serverless deployments are:

You can focus on your code and application and need not worry about the underlying infrastructure

You need not worry about scaling, as the platform scales automatically under load

You pay for what you use and not for the resources provisioned for you

Some of the drawbacks of serverless deployments are:

Many constraints and limitations, such as limited language support, and a better fit for stateless services only

Can only respond to requests from a limited set of input sources

Only suited for applications that can start quickly

Chance of high latency in case of a sudden spike in load
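The usage-based charging described for serverless deployment (a charge per request plus a charge for the resources each request consumes) can be illustrated with a small cost estimate. This is only a sketch under stated assumptions: the `PricingModel` class, the unit prices, and the workload figures are hypothetical and do not reflect any provider's actual price list.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PricingModel:
    """Hypothetical usage-based prices; real providers publish their own."""
    price_per_million_requests: float
    price_per_gb_second: float


def monthly_cost(pricing: PricingModel, requests: int,
                 avg_duration_ms: float, memory_mb: int) -> float:
    """Estimate the bill as request charge plus compute (memory x time) charge."""
    request_charge = requests / 1_000_000 * pricing.price_per_million_requests
    # Compute usage is measured here in GB-seconds: memory held times run time.
    gb_seconds = requests * (avg_duration_ms / 1000) * (memory_mb / 1024)
    compute_charge = gb_seconds * pricing.price_per_gb_second
    return request_charge + compute_charge


if __name__ == "__main__":
    # Assumed prices and workload, for illustration only.
    pricing = PricingModel(price_per_million_requests=0.20,
                           price_per_gb_second=0.00002)
    cost = monthly_cost(pricing, requests=3_000_000,
                        avg_duration_ms=200, memory_mb=512)
    print(f"{cost:.2f}")  # 6.60
```

Because both terms scale with the number of requests, an idle function costs nothing in this model, which is the sense in which you pay for what you use rather than for what is provisioned.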

Service Deployment Platform

This pattern uses a deployment platform, which is automated infrastructure for application deployment. It provides a service abstraction, which is a named set of highly available (for example, load-balanced) service instances. Some examples of this type are Docker swarm mode, Kubernetes, AWS Lambda, Cloud Foundry, and AWS Elastic Beanstalk.
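The idea of a service abstraction, a name that stands for a set of load-balanced instances, can be sketched in a few lines. The `ServiceRegistry` class, its methods, and the addresses below are hypothetical and exist only for illustration; real platforms implement this with far more machinery, such as health checks and DNS or proxy-based routing.

```python
from typing import Dict, List


class ServiceRegistry:
    """Toy service abstraction: a name resolves to one of several instances."""

    def __init__(self) -> None:
        self._instances: Dict[str, List[str]] = {}
        self._cursors: Dict[str, int] = {}

    def register(self, name: str, address: str) -> None:
        """Add an instance address under a service name."""
        self._instances.setdefault(name, []).append(address)

    def deregister(self, name: str, address: str) -> None:
        """Remove an instance, for example after it fails or is scaled down."""
        instances = self._instances.get(name, [])
        if address in instances:
            instances.remove(address)

    def resolve(self, name: str) -> str:
        """Return the next instance for the service in round-robin order."""
        instances = self._instances.get(name)
        if not instances:
            raise LookupError(f"no instances available for service {name!r}")
        index = self._cursors.get(name, 0) % len(instances)
        self._cursors[name] = index + 1
        return instances[index]


if __name__ == "__main__":
    registry = ServiceRegistry()
    registry.register("catalog", "10.0.0.1:8080")  # hypothetical addresses
    registry.register("catalog", "10.0.0.2:8080")
    print(registry.resolve("catalog"))  # 10.0.0.1:8080
    print(registry.resolve("catalog"))  # 10.0.0.2:8080
    registry.deregister("catalog", "10.0.0.2:8080")
    print(registry.resolve("catalog"))  # 10.0.0.1:8080
```

Callers only ever use the name `catalog`; which instance answers, and how many instances exist, is the platform's concern.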

Summary

When deploying microservices, you must consider factors like programming languages and frameworks, deployment requirements, resource usage, scaling, isolation, and monitoring. The patterns to choose from are multiple service instances per host, service instance per VM, service instance per container, serverless deployment, and a service deployment platform.
