08

JanTop 50 Python Data Science Interview Questions and Answers

Python Data Science Interview

If you are getting into Data Science, Python is your best friend. Why? It's simple, powerful, and packed with libraries like NumPy, Pandas, and Scikit-learn that make data analysis, visualization, and machine learning easy.

Now, if you're preparing for a Python Data Science interview, expect a mix of Python fundamentals, data manipulation, statistics, and machine learning concepts. You might face questions like:

- How do you handle missing data in Pandas?

- What’s the difference between a list and a NumPy array?

- How does the groupby function work in Pandas?

- Explain the difference between shallow and deep copy in Python.

- What is broadcasting in NumPy?

These questions test your problem-solving skills and understanding data structures in Python. So, brush up on your Python basics, get comfortable with data manipulation, and practice writing efficient code. Python is the #1 skill for tech’s future. Don’t lag behind. Join our Free Python Course and code your way to success!

Python Data Science Interview Questions for Entry-Level (0-1 year experience)

Python Basics & Data Structures

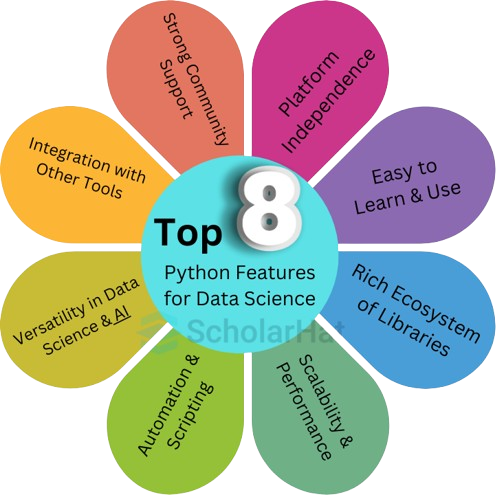

Q 1. What are Python’s key features that make it popular in data science?

1. Easy to Learn & Use

- Python has a simple syntax that’s close to natural language, making it beginner-friendly and easy to write, read, and debug.

2. Rich Ecosystem of Libraries

Python has a vast collection of pre-built libraries specifically for Data Science, including:

- NumPy – Numerical computing and arrays

- Pandas – Data manipulation and analysis

- Matplotlib & Seaborn – Data visualization

- Scikit-learn – Machine learning algorithms

- TensorFlow & PyTorch – Deep learning

3. Platform Independence

- Python is cross-platform, meaning you can run the same code on Windows, macOS, or Linux without modification.

4. Strong Community Support

- A large global community contributes to continuous improvements, vast documentation, and active forums like Stack Overflow and GitHub.

5. Integration with Other Tools

- Python integrates well with SQL databases, cloud platforms, and big data tools like Apache Spark, and Hadoop.

6. Versatility in Data Science & AI

- It supports data wrangling, statistical analysis, machine learning, deep learning, and automation, making it a one-stop solution for all Data Science needs.

7. Automation & Scripting

- Python is great for automating repetitive tasks such as data cleaning, web scraping, and ETL (Extract, Transform, Load) processes.

8. Scalability & Performance

- With optimizations like Cython, NumPy (vectorized operations), and multiprocessing, Python handles large-scale data efficiently.

Q2. What is the difference between a list and a tuple?

Difference Between List and Tuple in Python:| Feature | List | Tuple |

|---|---|---|

| Mutability | Mutable (can be changed) | Immutable (cannot be changed) |

| Syntax | list = [1, 2, 3] (square brackets) | tuple = (1, 2, 3) (parentheses) |

| Performance | Slower due to dynamic resizing | Faster due to fixed size |

| Memory Usage | Uses more memory | Uses less memory |

| Modification | Can add, remove, or modify elements | Cannot modify elements |

| Use Case | When data needs to change frequently | When data should remain constant (e.g., coordinates, configurations) |

Example:

1. List (Mutable)

my_list = [1, 2, 3]

my_list.append(4) # Allowed

print(my_list) # Output: [1, 2, 3, 4]

Output

[1, 2, 3, 4]

my_tuple = (1, 2, 3)

my_tuple[0] = 10 # TypeError: 'tuple' object does not support item assignment

Q3. How do you handle missing values in Pandas?

1. Detect Missing ValuesUse .isnull() or .notnull() to check for missing values.

import pandas as pd

data = {'A': [1, 2, None, 4], 'B': [None, 2, 3, 4]}

df = pd.DataFrame(data)

print(df.isnull()) # Returns True for missing values

print(df.notnull()) # Returns True for non-missing values Use .dropna() to remove rows or columns with missing values.

df.dropna() # Removes rows with NaN values

df.dropna(axis=1) # Removes columns with NaN values Use .fillna() to replace missing values.

df.fillna(0) # Replaces all NaNs with 0

df.fillna(df.mean()) # Replaces NaNs with column mean

df.fillna(method='ffill') # Forward fill (previous value)

df.fillna(method='bfill') # Backward fill (next value)

Use .replace() to replace specific missing values.

df.replace(to_replace=None, value=0, inplace=True) Use .interpolate() to estimate missing values based on available data.

df.interpolate(method='linear') # Linear interpolation Q4. Explain the difference between shallow copy and deep copy in Python.

In Python, copying an object can be done using shallow copy or deep copy:

- Shallow Copy (copy.copy() ): Creates a new object but does not create copies of nested objects. Instead, it references them.

import copy

list1 = [[1, 2], [3, 4]]

shallow_copy = copy.copy(list1)

shallow_copy[0][0] = 99

print(list1) # Output: [[99, 2], [3, 4]]

Output

[[99, 2], [3, 4]]

- Deep Copy (copy.deepcopy()): Creates a completely independent copy of the original object, including all nested objects.

deep_copy = copy.deepcopy(list1)

deep_copy[0][0] = 42

print(list1) # Output: [[99, 2], [3, 4]] Output

[[99, 2], [3, 4]]

Q5. What is the difference betweenis and ==in Python?

is checks memory reference, while == checks values.

a = [1, 2, 3]

b = a

c = [1, 2, 3]

print(a == c) # True

print(a is c) # False

print(a is b) # True

Q6. How does Python’s memory management work?

Python uses automatic garbage collection, reference counting, and memory pools.

import sys

x = [1, 2, 3]

print(sys.getrefcount(x))

Q7. What are Python’s built-in data types?

Python has various built-in data types, including:

- Numeric Types: int, float, complex

- Sequence Types: list, tuple, range

- Text Type: str

- Set Types: set, frozenset

- Mapping Type: dict

- Boolean Type: bool

- Binary Types: bytes, bytearray, memoryview

Q8. What is the difference between mutable and immutable data types?

1. Mutable: Can be changed after creation (e.g., list, dict, set).

lst = [1, 2, 3]

lst[0] = 99 # Allowed 2. Immutable: Cannot be changed after creation (e.g., int, str, tuple).

tup = (1, 2, 3)

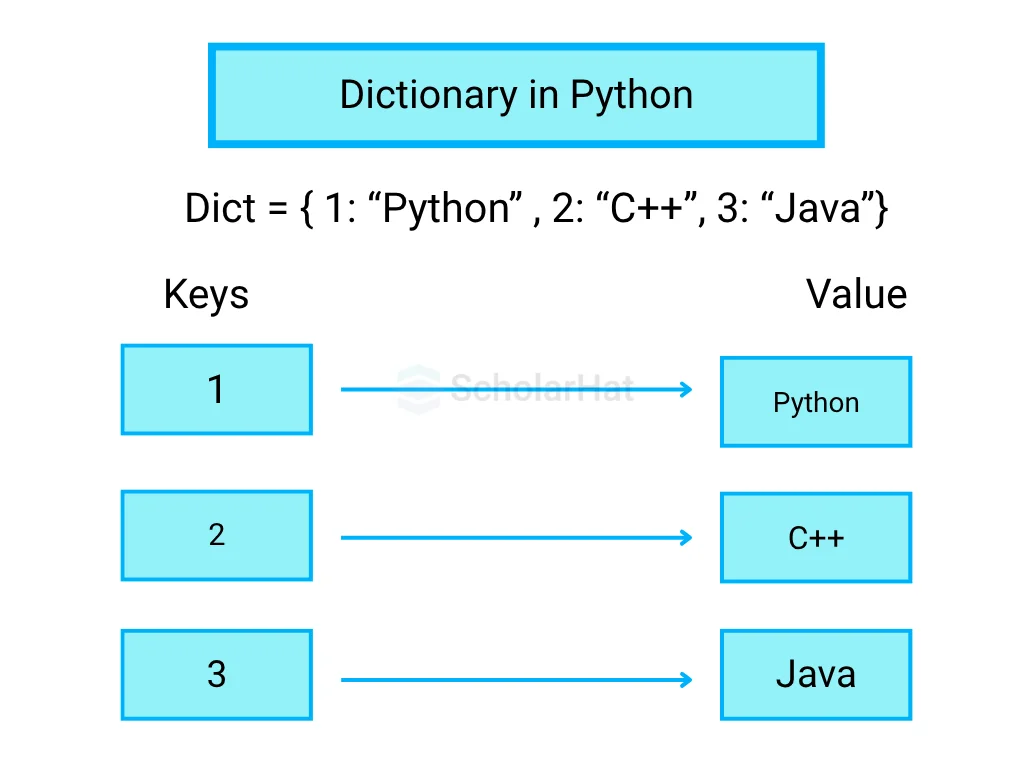

tup[0] = 99 # Error Q9. Explain the difference between a Python set and a dictionary.

1. Set: Unordered collection of unique elements.

s = {1, 2, 3, 4} 2. Dictionary: Dictionary in Python, Stores key-value pairs.

d = {"name": "Alice", "age": 25} Q10. What is the difference between a generator and a list comprehension?

1. List Comprehension: Returns a list, storing all elements in memory.

lst = [x**2 for x in range(5)]

print(lst) # [0, 1, 4, 9, 16] 2. Generator: Returns an iterator that generates values on the fly, saving memory.

gen = (x**2 for x in range(5))

print(next(gen)) # 0

print(next(gen)) # 1 Data Processing & Analysis

Q16. What are Pandas DataFrames, and how do they differ from Series?

- DataFrame: A 2D labeled data structure similar to a table with rows and columns.

- Series: A 1D labeled array, essentially a single column of a DataFrame.

import pandas as pd

s = pd.Series([10, 20, 30])

df = pd.DataFrame({"A": [10, 20, 30], "B": [40, 50, 60]})

Q17. How do you merge, join, and concatenate data in Pandas?

- merge(): Used for database-style joins (inner, outer, left, right).

- join(): Similar to merge() but works with index-based joins.

- concat(): Combines data along an axis.

df1 = pd.DataFrame({"A": [1, 2], "B": [3, 4]})

df2 = pd.DataFrame({"A": [5, 6], "B": [7, 8]})

result = pd.concat([df1, df2])

merged = df1.merge(df2, on="A", how="inner")

Q18. Explain the difference between .iloc[] and .loc[] in Pandas.

1. iloc[]: Selects rows/columns by integer location (index-based).

2. loc[]: Selects rows/columns by label (name-based).

df = pd.DataFrame({"A": [1, 2, 3], "B": [4, 5, 6]}, index=['x', 'y', 'z'])

print(df.iloc[0]) # First row using index

print(df.loc['x']) # First row using label

Q19. What are lambda functions in Python?

Lambda functions in Python are anonymous (one-liner) functions using the lambda keyword.

add = lambda x, y: x + y

print(add(3, 5)) # Output: 8 Output

8

Q20. How do you handle duplicate values in Pandas?

Use drop_duplicates()to remove duplicates.

df = pd.DataFrame({"A": [1, 2, 2, 3], "B": [4, 5, 5, 6]})

df = df.drop_duplicates()

Q21. What is the role of the apply() function in Pandas?

Applies a function to rows or columns.

df["A_squared"] = df["A"].apply(lambda x: x**2) Q22. How do you optimize performance in Pandas for large datasets?

- Use vectorized operations.

- Convert data types to save memory.

- Use chunk processing for large files.

df["A"] = df["A"].astype("int8")

Q23. Explain the difference between .any() and .all() functions in Pandas.

.any() .all()

df = pd.DataFrame({"A": [True, False, True], "B": [True, True, True]})

print(df.any()) # Checks if any value is True in each column

print(df.all()) # Checks if all values are True in each column

Q24. How do you convert categorical data into numerical form?

Use pd.get_dummies()for one-hot encoding.

df = pd.DataFrame({"Color": ["Red", "Blue", "Green"]})

df_encoded = pd.get_dummies(df, columns=["Color"])

Q25. What is the difference between .pivot() and .pivot_table() in Pandas?

.pivot() reshapes data but requires unique index/column combinations. .pivot_table() works even with duplicate values and allows aggregation.

df = pd.DataFrame({"Date": ["2024-01", "2024-01", "2024-02"],

"Category": ["A", "B", "A"],

"Value": [100, 200, 150]})

pivot = df.pivot(index="Date", columns="Category", values="Value")

pivot_table = df.pivot_table(index="Date", columns="Category", values="Value", aggfunc="sum")

Machine Learning & Statistical Computing

Q26. What are the different types of probability distributions used in Data Science?

Some common probability distributions include Normal, Binomial, Poisson, Exponential, and Uniform distributions.

import numpy as np

import matplotlib.pyplot as plt

from scipy.stats import norm

x = np.linspace(-3, 3, 100)

plt.plot(x, norm.pdf(x))

plt.title("Normal Distribution")

plt.show()

Q27. Explain the concept of feature scaling in machine learning.

Feature scaling ensures numerical features are on the same scale using Standardization or Min-Max Scaling.

from sklearn.preprocessing import StandardScaler

scaler = StandardScaler()

scaled_data = scaler.fit_transform([[10], [20], [30]])

Q28. How does NumPy handle multidimensional arrays?

NumPy uses

ndarray

import numpy as np

arr = np.array([[1, 2, 3], [4, 5, 6]])

print(arr.shape) # (2,3)

print(arr[:, 1]) # Second column

Q29. What is the difference between mean, median, and mode?

Mean is the average, Median is the middle value, and Mode is the most frequent value.

import numpy as np

from scipy import stats

data = [1, 2, 2, 3, 4]

print(np.mean(data)) # Mean

print(np.median(data)) # Median

print(stats.mode(data)) # Mode

Q30. How do you implement linear regression using NumPy?

import numpy as np

X = np.array([1, 2, 3, 4, 5])

y = np.array([2, 4, 6, 8, 10])

A = np.vstack([X, np.ones(len(X))]).T

m, c = np.linalg.lstsq(A, y, rcond=None)[0]

print(f"Slope: {m}, Intercept: {c}")

Q31. Explain the difference between supervised and unsupervised learning.

Supervised learning uses labeled data, while Unsupervised learning finds patterns without labels.

Q32. How do you calculate the correlation between two variables in Python?

import pandas as pd

data = {"A": [1, 2, 3, 4], "B": [2, 4, 6, 8]}

df = pd.DataFrame(data)

print(df.corr())

Q33. What is the purpose of the scipy.stats module?

The scipy.statsmodule provides statistical functions like probability distributions and hypothesis testing.

from scipy import stats

data = [1, 2, 3, 4, 5]

print(stats.ttest_1samp(data, 3)) # One-sample t-test

Q34. How do you handle imbalanced datasets in Python?

Methods include oversampling (SMOTE), undersampling, and class weighting.

from imblearn.over_sampling import SMOTE

X, y = [[1], [2], [3], [4]], [0, 0, 1, 1]

smote = SMOTE()

X_resampled, y_resampled = smote.fit_resample(X, y)

Q35. How do you implement a k-means clustering algorithm using Python?

from sklearn.cluster import KMeans

import numpy as np

X = np.array([[1, 2], [1, 4], [5, 8], [8, 8]])

kmeans = KMeans(n_clusters=2)

kmeans.fit(X)

print(kmeans.labels_)

print(kmeans.cluster_centers_)

Python Data Science Interview Questions for Advanced-Level (5+ years experience)

Performance Optimization & Best Practices

Q36. How do you handle memory-efficient operations in NumPy?

To optimize memory usage in NumPy:

- Use appropriate data types (e.g., int8 instead of int64).

- Leverage views instead of copies.

- Use in-place operations to reduce memory overhead.

- Utilize NumPy’s broadcasting to avoid large intermediate arrays.

import numpy as np

arr = np.array([1, 2, 3, 4], dtype=np.int8)

arr += 2 # Memory-efficient operation Q37. What are some techniques to speed up Pandas operations?

- Use vectorized operations instead of loops.

- Convert object data types to categorical for efficiency.

- Use chunk processing for large datasets.

- Enable multi-threading with modin.pandas.

df = pd.DataFrame({"A": [1, 2, 3, 4]})

df["A"] = df["A"] * 2 # Faster than using apply()

Q38. How does Python’s Global Interpreter Lock (GIL) impact performance?

The Global Interpreter Lock (GIL) restricts execution to one thread at a time, meaning:

- CPU-bound tasks do not benefit from multithreading.

- I/O-bound tasks can still benefit from multithreading.

- Multiprocessing is better for CPU-heavy tasks.

import threading

def compute():

sum([i**2 for i in range(1000000)])

threads = [threading.Thread(target=compute) for _ in range(4)]

for t in threads: t.start()

for t in threads: t.join() Q39. Explain multithreading vs multiprocessing in Python.

| Feature | Multithreading | Multiprocessing |

|---|---|---|

| Execution | Multiple threads in a single process | Multiple separate processes |

| Best For | I/O-bound tasks | CPU-bound tasks |

| GIL Effect | Affected | Not affected |

| Memory Usage | Shares memory space | Each process has separate memory |

Example: Multithreading (I/O-bound task)

import threading, time

def task():

time.sleep(2)

print("Task complete")

threads = [threading.Thread(target=task) for _ in range(5)]

for t in threads: t.start()

for t in threads: t.join()

Example: Multiprocessing (CPU-bound task)

import multiprocessing

def compute():

sum([i**2 for i in range(1000000)])

processes = [multiprocessing.Process(target=compute) for _ in range(4)]

for p in processes: p.start()

for p in processes: p.join()

Q40. How do you parallelize computations in Python?

Python provides several ways to parallelize computations:

- Usefor CPU-bound tasks.

multiprocessing - Usefor efficient parallelism.

concurrent.futures - Usefor parallel loops.

joblib - Usefor parallel Pandas-like operations.

Dask

Example: Parallel processing with concurrent.futures

import concurrent.futures

def compute(n):

return sum(i**2 for i in range(n))

with concurrent.futures.ProcessPoolExecutor() as executor:

results = executor.map(compute, [1000000, 2000000, 3000000])

print(list(results)) Deep Learning & Advanced Machine Learning:

Q41. What are the different ways to preprocess text data for NLP?

Text preprocessing includes:

- Tokenization

- Lowercasing

- Removing Stopwords

- Stemming and Lemmatization

- Removing Punctuation

- Vectorization (TF-IDF, Bag-of-Words, Word Embeddings)

import nltk

from nltk.tokenize import word_tokenize

from nltk.corpus import stopwords

nltk.download("punkt")

nltk.download("stopwords")

text = "Natural Language Processing is amazing!"

tokens = word_tokenize(text.lower())

clean_tokens = [word for word in tokens if word not in stopwords.words("english")]

print(clean_tokens)

Q42. How do you implement a decision tree from scratch in Python?

Steps:

- Calculate Gini Impurity or Entropy

- Choose the best feature to split the dataset

- Recursively build the tree

import numpy as np

import pandas as pd

from sklearn.tree import DecisionTreeClassifier

# Sample dataset

data = {'Feature': [0, 1, 1, 0, 1], 'Label': [0, 1, 1, 0, 1]}

df = pd.DataFrame(data)

clf = DecisionTreeClassifier(criterion="gini")

clf.fit(df[['Feature']], df['Label'])

print(clf.predict([[1]]))

Q43. Explain the role of TensorFlow and PyTorch in Deep Learning.

| Feature | TensorFlow | PyTorch |

|---|---|---|

| Developed By | ||

| Computation Graph | Static & Dynamic | Dynamic |

| Best For | Production | Research |

import torch

import torch.nn as nn

class SimpleNN(nn.Module):

def __init__(self):

super(SimpleNN, self).__init__()

self.fc1 = nn.Linear(2, 1)

def forward(self, x):

return self.fc1(x)

model = SimpleNN()

print(model)

Q44. How does a convolutional neural network (CNN) process images?

Key CNN layers:

- Convolutional Layer - Extracts features

- ReLU - Activation function

- Pooling Layer - Reduces dimensionality

- Fully Connected Layer - Classification

from tensorflow import keras

from tensorflow.keras import layers

model = keras.Sequential([

layers.Conv2D(32, (3,3), activation='relu', input_shape=(28,28,1)),

layers.MaxPooling2D((2,2)),

layers.Flatten(),

layers.Dense(10, activation='softmax')

])

model.summary()

Q45. What are hyperparameter tuning techniques in Python?

Common methods:

- Grid Search

- Random Search

- Bayesian Optimization

- HyperOpt & Optuna

- Genetic Algorithms

from sklearn.model_selection import GridSearchCV

from sklearn.ensemble import RandomForestClassifier

param_grid = {'n_estimators': [10, 50, 100], 'max_depth': [3, 5, None]}

clf = GridSearchCV(RandomForestClassifier(), param_grid, cv=5)

clf.fit(X_train, y_train)

print(clf.best_params_)

Big Data & Scalability:

Q46. How do you work with large datasets that don’t fit in memory?

- Use Chunking in Pandas

- Utilize Dask for parallel processing

- Store data in Databases instead of memory

- Use Apache Spark for distributed computing

import pandas as pd

chunk_size = 10000

for chunk in pd.read_csv('large_dataset.csv', chunksize=chunk_size):

process(chunk)

Q47. What are some Python libraries for handling big data?

- Pandas

- Dask

- Vaex

- PySpark

- Modin

import dask.dataframe as dd

df = dd.read_csv("large_dataset.csv")

df.groupby('column_name').mean().compute()

Q48. Explain how Apache Spark integrates with Python (PySpark).

PySpark is the Python API for Apache Spark, enabling distributed computing.

from pyspark.sql import SparkSession

spark = SparkSession.builder.appName("BigDataProcessing").getOrCreate()

df = spark.read.csv("large_dataset.csv", header=True, inferSchema=True)

df.show()

Q49. How do you deploy machine learning models using Flask or FastAPI?

1. Using Flask:

from flask import Flask, request, jsonify

import pickle

app = Flask(__name__)

model = pickle.load(open("model.pkl", "rb"))

@app.route('/predict', methods=['POST'])

def predict():

data = request.json['features']

prediction = model.predict([data])

return jsonify({'prediction': prediction.tolist()})

if __name__ == "__main__":

app.run(debug=True)

from fastapi import FastAPI

import pickle

app = FastAPI()

model = pickle.load(open("model.pkl", "rb"))

@app.post("/predict/")

async def predict(features: list):

prediction = model.predict([features])

return {"prediction": prediction.tolist()}

Q50. How do you schedule and automate data pipelines in Python?

Common tools for scheduling data pipelines:

- Apache Airflow

- Luigi

- Cron Jobs

- Perfect

from airflow import DAG

from airflow.operators.python_operator import PythonOperator

from datetime import datetime

def process_data():

print("Data Processing Task Executed!")

dag = DAG('data_pipeline', schedule_interval='@daily', start_date=datetime(2024, 1, 1))

task = PythonOperator(task_id='process_task', python_callable=process_data, dag=dag)

| Download this PDF Now - Python Interview Questions PDF By ScholarHat |

In this article, we covered fundamental and advanced Python interview questions and answers for data science related to data handling, memory management, deep learning, and big data technologies like Apache Spark and PySpark. Understanding these topics will not only help you ace interviews but also enable you to build efficient and scalable data-driven applications.

Full-Stack Python Developers earn 50–70% more than basic coders. Join our Full-Stack Python Developer Training and skyrocket your salary!

Crack Your Next Python Interview – Grab the Free Expert eBook!

Crack Your Next Python Interview – Grab the Free Expert eBook!Python Interview Questions and Answers Book Unlock expert-level Python interview preparation with our exclusive eBook! Get instant access to a curated collection of real-world interview questions, detailed answers, and professional insights — all designed to help you succeed.

No downloads needed — just quick, free access to the ultimate guide for Python interviews. Start your preparation today and move one step closer to your dream job!.

FAQs

Python offers several libraries for data science, including:

- Pandas (for data manipulation and analysis)

- NumPy (for numerical computing)

- Matplotlib & Seaborn (for data visualization)

- Scikit-Learn (for machine learning)

- TensorFlow & PyTorch (for deep learning)

- Dask & PySpark (for big data processing)

- Shallow Copy creates a new object but references the original elements. Changes in one affect the other.

- Deep Copy creates a completely independent copy, ensuring changes do not affect the original object.

- Use vectorized operations with NumPy instead of loops.

- Optimize memory usage with astype() in Pandas.

- Use list comprehensions instead of traditional loops.

- Utilize multiprocessing for parallel computing.

Take our Python skill challenge to evaluate yourself!

In less than 5 minutes, with our skill challenge, you can identify your knowledge gaps and strengths in a given skill.