25 Apr

Data Collection & Management in Data Science

Introduction

Any effective research or business effort starts with data collection, and nowhere is this more important than in data science. Data collection lays the groundwork for analysis, insight, and well-informed decisions, so the ability to gather, manage, and analyze data is essential in the field. This article examines the fundamental practices of data collection and explains why data gathering matters for both research and business. It covers common data collection techniques, typical data sources, and recommended practices for data management and preservation. These are all crucial aspects to master when venturing into the world of data science.

Data Source

In data collection and management, a data source is the place or origin from which data is obtained. A database, website, API, sensor, or any other platform or system that produces or stores data can be a data source. Data scientists locate and access the relevant data sources to obtain the information required for analysis and decision-making.

Data collection in Data Science:

Data collection is the first step in the data science process. Its goal is to gather the data needed to answer the research question or solve the problem that the project is trying to address. Here are several key points to consider:

- Data collection is essential for both business and research.

- Data collection supports the gathering of information, the testing of hypotheses, and the production of relevant findings.

- It enables scientists to find correlations, trends, and patterns that lead to important discoveries.

- In business, data collection offers insights into consumer behavior, market trends, and operational effectiveness.

- It enables businesses to streamline operations and make data-driven decisions.

- Data collection can provide a competitive advantage in the market.


Understanding different methods of Data collection in Data Science:

- Surveys and Questionnaires: In a survey, a sample population is asked a set of predetermined questions. This approach helps acquire demographic data as well as subjective preferences and opinions. Surveys can be conducted through online questionnaires, telephone interviews, or in-person interviews.

- Observational Studies: In observational studies, information is gathered by directly observing and documenting occurrences, actions, or events. This approach is frequently employed in disciplines like anthropology, psychology, and the social sciences. Observational data can come from field observations, video recordings, or existing records and documentation.

- Experiments: Experiments involve deliberately changing one or more factors to examine how they affect an outcome of interest. Data is gathered by comparing a control group with one or more experimental groups, which lets researchers establish cause-and-effect relationships. Experimental data can be gathered in controlled lab environments or in real-world situations.

- Interviews: Individuals are interviewed one-on-one or in groups to collect data. Interviews can be structured around a prepared series of questions or left unstructured to allow for free-flowing discussion. This approach works well for obtaining in-depth knowledge, insights, and qualitative data.

- Web scraping: This technique automatically extracts data from websites. Large amounts of structured or unstructured data can be gathered from a variety of web sources. Web scraping requires programming expertise and adherence to ethical and legal standards.

- Sensor data collection: Data from real-world objects or settings is gathered using sensors. Examples include heart rate monitors, accelerometers, temperature sensors, and GPS trackers. In industries including the Internet of Things, healthcare, and environmental monitoring, sensor data collection is common.

- Social Media Monitoring: As social media platforms have grown in popularity, researchers are gathering information from sites like Twitter, Facebook, and Instagram to analyze trends, attitudes, and general public opinion. This approach aids in the comprehension of user behavior and social dynamics.

- Existing Databases & Records: Information may be gathered from historical archives, databases, or records that already exist. This technique saves time and money, especially when working with large datasets. Government records, client databases, and medical records are a few examples.
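As a rough illustration of the web scraping method described above, the sketch below extracts links from a page using only Python's standard `html.parser` module. The HTML snippet, the `LinkCollector` class, and the `extract_links` helper are hypothetical names made up for this example; a real scraper would download the page first and would need to respect the site's terms of use.

```python
from html.parser import HTMLParser


class LinkCollector(HTMLParser):
    """Collects (href, link text) pairs from anchor tags."""

    def __init__(self):
        super().__init__()
        self.links = []      # finished (href, text) pairs
        self._href = None    # href of the <a> tag currently open, if any
        self._text = []      # text fragments seen inside that tag

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            self._href = dict(attrs).get("href")
            self._text = []

    def handle_data(self, data):
        # Only keep text that appears inside an open <a href=...> tag.
        if self._href is not None:
            self._text.append(data)

    def handle_endtag(self, tag):
        if tag == "a" and self._href is not None:
            self.links.append((self._href, "".join(self._text).strip()))
            self._href = None


# Hypothetical page content standing in for a downloaded web page.
SAMPLE_HTML = """
<ul>
  <li><a href="/reports/2023">Annual report 2023</a></li>
  <li><a href="/reports/2024">Annual report 2024</a></li>
</ul>
"""


def extract_links(html):
    """Parse an HTML string and return the links it contains."""
    parser = LinkCollector()
    parser.feed(html)
    return parser.links


links = extract_links(SAMPLE_HTML)
print(links)
```

Keeping the parsing step separate from the download step makes it easier to test the extraction logic on saved pages before running it against a live site.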

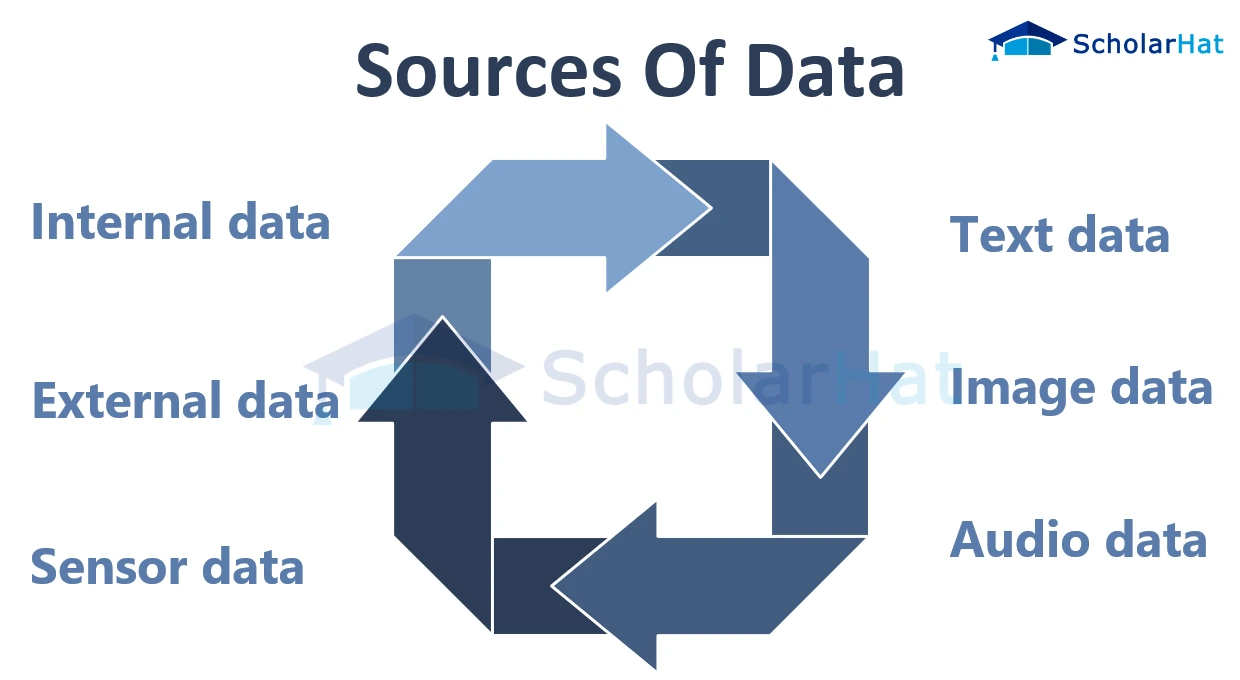

Sources of data

- Internal data: This refers to information gathered within a company, such as financial, customer, or sales data.

- External data: This refers to information gathered from sources outside of an organization, such as government statistics, social media, or weather services.

- Sensor data: This refers to information gathered through sensors, such as GPS, temperature, or heart rate readings.

- Text data: This is information gathered from written materials like news stories, social media posts, and product reviews.

- Image data: This is information gathered from visual sources like pictures, x-rays, or satellite images.

- Audio data: This is information that has been gathered from audio sources like voice, music, or noises in the environment.

Using APIs for data collection

- In the age of technology, APIs (Application Programming Interfaces) are helpful for data acquisition.

- They let programmers access and retrieve data from various web sources.

- APIs give data scientists the ability to collect real-time data and use it in their analyses.

- They simplify data-collecting procedures by offering a standardized way of gaining access to and transferring data.

- Automating data collection through APIs improves data accuracy and consistency.

- When using APIs for data collection, it is important to take ethical issues and practical constraints, such as usage limits, into account.
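The points above can be sketched in a few lines of Python using only the standard library. The endpoint URL, the payload shape (`data`, `sensor_id`, `value`), and the helper names `fetch_json` and `to_records` are all assumptions made for illustration; a real API will define its own URL, authentication, and response format.

```python
import json
import urllib.request

# Hypothetical endpoint, used only for illustration.
API_URL = "https://api.example.com/v1/readings"


def fetch_json(url, timeout=10):
    """Download a URL and decode the response body as JSON."""
    request = urllib.request.Request(url, headers={"Accept": "application/json"})
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))


def to_records(payload):
    """Convert the assumed payload shape into a list of plain dicts."""
    return [
        {"sensor": item["sensor_id"], "value": float(item["value"])}
        for item in payload.get("data", [])
    ]


# A sample payload in the assumed shape, so the parsing step can be
# tried without network access.
sample_payload = json.loads(
    '{"data": [{"sensor_id": "t1", "value": "21.5"},'
    ' {"sensor_id": "t2", "value": "19.0"}]}'
)
records = to_records(sample_payload)
print(records)

# Against a live service, the call would look like:
# records = to_records(fetch_json(API_URL))
```

Converting every response into the same record structure is one way the standardized access that APIs offer carries through to consistent data downstream.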

Data Exploration in Data Science

Data science relies on data exploration and correction to ensure the accuracy and dependability of the data used for analysis. Key components of data exploration and correction include the following:

- Data Cleaning: Data cleaning entails locating and correcting problems with the acquired data, such as missing values, duplicates, inconsistencies, and errors. This procedure could involve filling in blanks, removing duplicates, standardizing formats, and fixing mistakes.

- Data Validation: It is crucial to validate the obtained data to verify its accuracy and integrity. This can be achieved by running several checks, including cross-checking the data against other sources, performing range checks, and comparing the data to predetermined rules.

- Data Transformation: Data transformation is the process of converting the gathered data into a format that is appropriate for analysis. Examples include aggregating data, normalizing variables, scaling numbers, and applying mathematical operations to create new features or indicators.

- Exploratory Data Analysis (EDA): EDA entails analyzing and visualizing the collected data to obtain insights, spot trends and outliers, and understand the underlying distribution and properties of the data. EDA aids in assessing data quality and locating potential problems or anomalies.

- Missing data management: Analysis outcomes can be severely affected by missing data. There are many ways to deal with missing data, including imputation approaches (such as mean, median, and regression imputation) and algorithms that can handle missing values directly.

- Identifying outliers: Outliers are data points that differ markedly from the rest of the data. Outliers must be recognized and handled carefully since they can skew the outcomes of the analysis. They can be identified with statistical techniques or with domain-specific thresholds.

- Feature engineering: To increase the predictive capacity of the data, feature engineering entails the creation of new variables or the transformation of existing variables. This procedure could involve developing interaction terms, calculating statistical measures, or properly encoding categorical variables.

- Data Documentation: For reproducibility and transparency, the exploration and correction process should be documented. Documentation helps keep track of the actions taken, choices made, and assumptions made during the data preparation and cleaning phase.

- Iterative Process: Data exploration and correction is frequently an iterative process. It entails assessing how data cleansing and transformation methods have affected the study's findings, making any necessary adjustments to the strategy, and repeating the procedure until the data is deemed ready for analysis.
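Several of the steps above (removing duplicates, median imputation, and statistical outlier detection) can be sketched with Python's standard `statistics` module. The sample rows and the helper names are hypothetical, and the 1.5 × IQR rule used here is only one common convention for flagging outliers, not the only valid choice.

```python
import statistics

# Hypothetical raw readings: None marks a missing value.
raw = [
    {"id": 1, "value": 10.0},
    {"id": 2, "value": 12.0},
    {"id": 2, "value": 12.0},   # exact duplicate of the previous row
    {"id": 3, "value": None},   # missing value
    {"id": 4, "value": 11.0},
    {"id": 5, "value": 13.0},
    {"id": 6, "value": 95.0},   # suspiciously large reading
]


def drop_duplicates(rows):
    """Keep the first occurrence of each (id, value) pair."""
    seen, unique = set(), []
    for row in rows:
        key = (row["id"], row["value"])
        if key not in seen:
            seen.add(key)
            unique.append(row)
    return unique


def impute_median(rows):
    """Replace missing values with the median of the known values."""
    known = [r["value"] for r in rows if r["value"] is not None]
    median = statistics.median(known)
    return [
        {**r, "value": median if r["value"] is None else r["value"]}
        for r in rows
    ]


def iqr_outliers(rows, k=1.5):
    """Return rows whose value lies outside [Q1 - k*IQR, Q3 + k*IQR]."""
    values = [r["value"] for r in rows]
    q1, _, q3 = statistics.quantiles(values, n=4)
    iqr = q3 - q1
    low, high = q1 - k * iqr, q3 + k * iqr
    return [r for r in rows if not low <= r["value"] <= high]


cleaned = impute_median(drop_duplicates(raw))
outliers = iqr_outliers(cleaned)
print(len(cleaned), "rows after cleaning")
print("flagged as outliers:", outliers)
```

Flagged rows are returned rather than deleted, which leaves the decision about how to handle them to someone with domain knowledge, in line with the iterative process described above.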

Data storage and management in data science

- Choosing the right storage technologies: Data scientists must choose the right storage technologies based on the volume, structure, and accessibility requirements of the data. These include databases (SQL or NoSQL), data lakes, and cloud storage.

- Implementing efficient data management strategies: Effective data management entails structuring data, establishing data governance practices, ensuring data quality, and setting up backup and recovery procedures to preserve the data's integrity and availability.
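As a small illustration of SQL storage with a quality rule and a backup step, the sketch below uses Python's built-in `sqlite3` module. The table layout, the allowed value range, and the sample rows are assumptions chosen for the example, and an in-memory database stands in for whatever storage technology a real project would select.

```python
import sqlite3

# In-memory database for the sketch; a real project would use a file
# path or a database server.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE readings ("
    " id INTEGER PRIMARY KEY,"
    " sensor TEXT NOT NULL,"
    " value REAL NOT NULL CHECK (value BETWEEN -50 AND 150))"
)

rows = [("t1", 21.5), ("t2", 19.0), ("t1", 22.1)]
with conn:  # commits on success, rolls back if an error is raised
    conn.executemany("INSERT INTO readings (sensor, value) VALUES (?, ?)", rows)

# The CHECK constraint enforces a simple data quality rule at write time.
try:
    with conn:
        conn.execute(
            "INSERT INTO readings (sensor, value) VALUES (?, ?)", ("t3", 999.0)
        )
    rejected = False
except sqlite3.IntegrityError:
    rejected = True

# Copy the database to a second connection as a basic backup.
backup = sqlite3.connect(":memory:")
conn.backup(backup)

backup_count = backup.execute("SELECT COUNT(*) FROM readings").fetchone()[0]
avg_t1 = conn.execute(
    "SELECT AVG(value) FROM readings WHERE sensor = ?", ("t1",)
).fetchone()[0]
print(rejected, backup_count, avg_t1)
```

Declaring constraints in the schema means invalid rows are refused when they are written, instead of being discovered later during analysis.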

Best practices for data collection and management

- Establish clear goals for data collection.

- Create a well-thought-out strategy for data collection.

- Maintain data quality by validating and cleansing it.

- For consistency, use standardized formats.

- Ensure the privacy and security of data.

- Document data collection methods for transparency.

- Use data governance for accountability and control.

- Back up and store data securely.

- Establish data lifecycle management and data retention policies.

- Review and update data management procedures regularly.